How To Ensure Data Freshness in Web Scraping

July 10, 2025

When it comes to web scraping, keeping your data up-to-date is non-negotiable. Outdated information leads to poor decisions, lost revenue, and even customer trust issues. Businesses that prioritize timely data are 23 times more likely to acquire customers and 19 times more likely to boost profits. Here's how you can stay ahead:

- Automate Data Collection: Use tools like Selenium or Scrapy to handle dynamic content and schedule scraping at intervals that match your business needs.

- Monitor Data Age: Add timestamps to track when data was last updated and set alerts for stale information.

- Optimize Accuracy: Validate fields, remove duplicates, and standardize formats to ensure reliability.

- Adapt Scraping Frequency: Scrape in real time for fast-changing data like prices, daily for inventory, and weekly for reviews.

Tools like ShoppingScraper simplify this process by automating updates, tracking changes, and offering real-time insights. With e-commerce sales expected to hit $8.1 trillion by 2026, ensuring your data is current is critical to staying competitive.

Setting Up Reliable Data Collection

Having a dependable data collection system ensures your information stays current and accurate. Building this framework involves selecting the right tools, using effective collection methods, and scheduling updates that align with how often your data changes.

Effective Methods for E-commerce Data Scraping

Automated web scrapers and crawlers are essential for collecting data efficiently. These tools can gather information from competitor websites and other sources in real time, eliminating the need for time-consuming manual processes. Your choice of scraping method depends on the complexity of the target site and the type of data you need.

For websites that rely heavily on JavaScript, specialized tools are necessary. Many e-commerce platforms use dynamic content loading, so your scraper must handle JavaScript and pagination effectively. Tools like Selenium are great for automating browser interactions with complex sites, while Scrapy provides a flexible, Python-based solution for both crawling and API-based data extraction.

To minimize disruptions caused by layout changes, focus on stable HTML elements and use CSS selectors or XPath expressions for targeting.
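
One way to read "stable HTML elements" is to target semantic attributes, such as schema.org `itemprop` markers, rather than auto-generated class names that tend to change between deployments. The sketch below uses only Python's standard library. The sample markup and field names are assumptions for illustration. In a Scrapy project the same idea would usually be written as a CSS selector such as `[itemprop="price"]`.

```python
from html.parser import HTMLParser

# Hypothetical product markup: class names look auto-generated,
# but the itemprop attributes are semantic and less likely to change.
SAMPLE_HTML = """
<div class="css-1x9zq3">
  <h1 itemprop="name" class="sc-a81b">Wireless Mouse</h1>
  <span class="sc-f03c" itemprop="price" content="19.99">$19.99</span>
</div>
"""


class ItempropExtractor(HTMLParser):
    """Collect the value of every element that carries an itemprop attribute."""

    def __init__(self):
        super().__init__()
        self.values = {}      # itemprop name -> extracted value
        self._current = None  # itemprop whose text we are waiting for

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        prop = attrs.get("itemprop")
        if prop is None:
            return
        if "content" in attrs:
            # Prefer the machine-readable value when the page provides one.
            self.values[prop] = attrs["content"]
        else:
            self._current = prop

    def handle_data(self, data):
        if self._current and data.strip():
            self.values[self._current] = data.strip()
            self._current = None

    def handle_endtag(self, tag):
        # Stop waiting for text once any element closes.
        self._current = None


def extract_fields(html):
    """Return a dict of itemprop name -> value found in the HTML."""
    parser = ItempropExtractor()
    parser.feed(html)
    parser.close()
    return parser.values
```

Calling `extract_fields(SAMPLE_HTML)` yields the name and price without referencing any class name, so a cosmetic redesign that only renames classes would not break it.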

Adding features like proxy rotation and rate limiting can also improve your data collection process. Rotating IP addresses and pacing requests help avoid detection and blocking, especially during high-traffic periods.
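
As a rough illustration of pacing and rotation, the sketch below cycles through a list of proxies and enforces a minimum gap between requests. The class name, the proxy URLs, and the 1.5-second default are assumptions, not values from any particular tool. The clock and sleep functions are injectable so the logic can be exercised without real waiting.

```python
import itertools
import time


class PoliteRequester:
    """Rotate through proxies and enforce a minimum delay between requests."""

    def __init__(self, proxies, min_interval=1.5,
                 clock=time.monotonic, sleep=time.sleep):
        self._proxies = itertools.cycle(proxies)  # round-robin rotation
        self._min_interval = min_interval         # seconds between requests
        self._clock = clock
        self._sleep = sleep
        self._last_request = None

    def next_slot(self):
        """Block until the next request is allowed, then return the proxy to use."""
        if self._last_request is not None:
            elapsed = self._clock() - self._last_request
            wait = self._min_interval - elapsed
            if wait > 0:
                self._sleep(wait)
        self._last_request = self._clock()
        return next(self._proxies)


# Hypothetical proxy endpoints, for illustration only.
EXAMPLE_PROXIES = [
    "http://proxy-a.example:8080",
    "http://proxy-b.example:8080",
]
```

A scraper would call `next_slot()` before each request and pass the returned proxy to whatever HTTP client it uses.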

Adaptive systems can make your scraping setup even more reliable. For instance, automated change detection can alert developers when a website's HTML structure changes, allowing for quick script updates. Machine learning models can also assist by automatically adjusting selectors and XPath expressions when layout changes are detected.
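
Automated change detection does not need machine learning to be useful. A minimal sketch, assuming that a page's "structure" can be approximated by its sequence of tags and class names, is to hash that sequence and compare it with the hash from the previous run. The function names here are illustrative.

```python
import hashlib
from html.parser import HTMLParser


class _TagCollector(HTMLParser):
    """Record each opening tag together with its sorted class names."""

    def __init__(self):
        super().__init__()
        self.tags = []

    def handle_starttag(self, tag, attrs):
        classes = dict(attrs).get("class") or ""
        self.tags.append(f"{tag}.{'.'.join(sorted(classes.split()))}")


def structure_fingerprint(html):
    """Hash the tag/class skeleton of a page, ignoring its text content."""
    collector = _TagCollector()
    collector.feed(html)
    collector.close()
    skeleton = "|".join(collector.tags)
    return hashlib.sha256(skeleton.encode("utf-8")).hexdigest()


def layout_changed(previous_fingerprint, html):
    """Return True when the page skeleton differs from the stored fingerprint."""
    return structure_fingerprint(html) != previous_fingerprint
```

Because text is ignored, a price change alone leaves the fingerprint untouched, while a renamed class or a replaced element changes it and can trigger an alert to whoever maintains the selectors.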

A distributed scraping architecture adds another layer of reliability. By using servers in different regions, you can balance the workload and reduce the risk of downtime caused by a single point of failure.

Once your tools and strategies are in place, the next step is determining how often to scrape each type of data.

How Often to Scrape Different Types of Data

The frequency of data scraping depends on the type of data and its relevance to your business decisions. Pricing data, for example, requires the most frequent updates because prices in competitive markets can change quickly. Many successful e-commerce businesses scrape pricing data in real time to stay ahead of the competition.

Inventory levels typically need to be monitored daily or weekly, depending on the industry. Fast-moving goods may require more frequent updates than slower-moving specialty items. Keeping an eye on inventory helps you spot supply chain issues or competitor stockouts, which could open up new opportunities.

Customer reviews and ratings usually grow at a slower pace, so weekly or monthly updates are often sufficient. However, during product launches or promotional events, when reviews may spike, more frequent monitoring might be necessary.

Product specifications and descriptions change less frequently, so monthly or quarterly updates are generally enough. However, it's a good idea to pay closer attention during new product launches or seasonal catalog updates, as these are times when changes are more likely.

| Data Type | Frequency | Business Impact |
|---|---|---|
| Pricing Data | Daily/Real-time | High – Direct revenue impact |
| Inventory Levels | Daily/Weekly | Medium – Stock planning |
| Customer Reviews | Weekly/Monthly | Medium – Brand monitoring |
| Product Specifications | Monthly/Quarterly | Low – Catalog updates |

Your scraping schedule should also reflect the demands of your industry. Businesses that rely on real-time data - like those adjusting prices or managing inventory - need constant monitoring to avoid revenue losses. On the other hand, market research or compliance-focused tasks might only need periodic updates, while strategic planning can benefit from analyzing historical trends and sentiment data.
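
The frequency guidance above can be turned into a small lookup that decides which scrape jobs are due. The intervals below are assumptions picked from within the ranges discussed (hourly pricing stands in for "real-time"), and should be tuned to your own market.

```python
from datetime import datetime, timedelta, timezone

# Assumed refresh intervals per data type; adjust to your business needs.
REFRESH_INTERVALS = {
    "pricing": timedelta(hours=1),
    "inventory": timedelta(days=1),
    "reviews": timedelta(weeks=1),
    "specifications": timedelta(days=30),
}


def is_due(data_type, last_scraped, now=None):
    """Return True when the data type's refresh interval has elapsed."""
    now = now or datetime.now(timezone.utc)
    return now - last_scraped >= REFRESH_INTERVALS[data_type]


def due_jobs(last_runs, now=None):
    """Given data type -> last scrape time, list the types that need a new run."""
    return sorted(
        data_type
        for data_type, last_scraped in last_runs.items()
        if is_due(data_type, last_scraped, now)
    )
```

A scheduler can call `due_jobs()` on each tick and launch only the scrapers it returns, so slow-changing data is not fetched more often than necessary.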

Start with a cautious request rate, such as one request every 1–2 seconds, and increase it gradually while monitoring how the website responds (for example, by watching for HTTP 429 responses or rising error rates). Always review the site's terms of service and robots.txt file to ensure your data collection practices are ethical and compliant.
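
Python's standard library includes a robots.txt parser, which makes the compliance check easy to automate. The sketch below parses a sample file from a string so it runs without network access. The robots.txt content, the user agent name, and the URLs are made up for illustration. Against a live site you would call `set_url()` and `read()` on the parser instead.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for illustration.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Crawl-delay: 2
Disallow: /checkout/
Disallow: /account/
"""


def build_policy(robots_txt):
    """Parse robots.txt text into a RobotFileParser."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def request_delay(parser, user_agent, default=1.5):
    """Use the site's Crawl-delay when present, else a cautious default (seconds)."""
    delay = parser.crawl_delay(user_agent)
    return float(delay) if delay is not None else default
```

Before fetching a URL, a scraper can check `policy.can_fetch(user_agent, url)` and skip anything disallowed, then wait `request_delay(policy, user_agent)` seconds between requests.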

With the e-commerce market projected to hit $8.1 trillion by 2026, having a dependable and adaptable data collection system is more important than ever. Tailor your scraping frequency to your business's unique needs, the complexity of the data, and any relevant compliance rules.

Automating Updates and Tracking Data Age

Keeping thousands of product listings updated manually is a daunting task. Automation not only simplifies this process but also ensures your data stays current and reliable.

Setting Up Automatic Scraping Schedules

For automating data collection, cron jobs are a solid choice. These time-based schedulers work on Unix-like systems and allow you to run scraping scripts at set intervals. For example, a cron job with the schedule `0 */6 * * *` runs at minute 0 of every sixth hour (00:00, 06:00, 12:00, and 18:00), which suits frequent price updates or inventory changes.

If you're looking for more reliability, cloud-based scheduling is worth considering. GitHub Actions supports scheduled workflows directly, while AWS Lambda and Google Cloud Functions are typically paired with a scheduler such as Amazon EventBridge or Cloud Scheduler. Either way, the work moves off your local machine, so scrapers keep running regardless of local downtime or hardware issues. Plus, these services can scale to handle larger datasets as your needs grow.

Another option is using scraping APIs with built-in orchestration features. These APIs let you automate scraping at custom intervals without worrying about the underlying infrastructure.

The frequency of your scraping should align with your business needs. For instance, a retailer focused on price competition might scrape hourly for real-time updates, while others tracking inventory could opt for a daily schedule. To ensure smooth operations, include error handling, retry logic, and rate limiting in your automation setup. These measures help avoid interruptions and keep the data flowing seamlessly.
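
Retry logic is often implemented as exponential backoff with a little random jitter. The sketch below wraps any fetch function you supply. The exception class, function name, and default delays are assumptions for illustration, and the fetch function is expected to raise `TransientScrapeError` for failures worth retrying, such as timeouts or HTTP 429/503 responses.

```python
import random
import time


class TransientScrapeError(Exception):
    """Raised by a fetch function for errors that are worth retrying."""


def fetch_with_retry(fetch, url, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url), retrying transient failures with exponential backoff.

    Delays grow as base_delay * 2**(attempt - 1), plus up to 10% jitter so
    that many workers do not retry in lockstep.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return fetch(url)
        except TransientScrapeError:
            if attempt == max_attempts:
                raise  # give up and let the caller log or alert
            delay = base_delay * 2 ** (attempt - 1)
            sleep(delay + random.uniform(0, delay * 0.1))
```

Non-transient errors (for example, a 404 or a parsing bug) are deliberately not caught, so they surface immediately instead of being retried.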

Once you've automated your scraping tasks, the next step is to monitor how fresh your data is.

Adding Timestamps and Stale Data Alerts

Tracking the age of your data is crucial, and timestamps are your best friend here. Each piece of data you collect should include metadata showing when it was scraped, processed, and last verified. This allows you to quickly spot outdated datasets that need refreshing.

Storing these timestamps in a database can make tracking even easier. Record them in UTC using a standard format such as ISO 8601, and create separate fields such as "collected_at" and "checked_at" to distinguish between when data was initially gathered and when it was last confirmed as accurate. Indexing these fields can also speed up queries.
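
A minimal sketch of this layout using SQLite from Python's standard library is shown below. The table name, the choice of EAN as the key, and the rule that "collected_at" only moves when the price actually changes are assumptions made for the example.

```python
import sqlite3
from datetime import datetime, timedelta, timezone


def _stamp(moment):
    """Format a timezone-aware datetime as a fixed-width ISO 8601 string."""
    return moment.astimezone(timezone.utc).isoformat(timespec="seconds")


def open_store(path=":memory:"):
    """Create the products table and an index on checked_at."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS products (
               ean TEXT PRIMARY KEY,
               price REAL NOT NULL,
               collected_at TEXT NOT NULL,  -- when the current value was first seen
               checked_at TEXT NOT NULL     -- when it was last confirmed
           )"""
    )
    conn.execute(
        "CREATE INDEX IF NOT EXISTS idx_products_checked_at ON products (checked_at)"
    )
    return conn


def record_observation(conn, ean, price, now=None):
    """Insert a new product, or update timestamps for an existing one."""
    stamp = _stamp(now or datetime.now(timezone.utc))
    row = conn.execute("SELECT price FROM products WHERE ean = ?", (ean,)).fetchone()
    if row is None:
        conn.execute("INSERT INTO products VALUES (?, ?, ?, ?)",
                     (ean, price, stamp, stamp))
    elif row[0] != price:
        conn.execute(
            "UPDATE products SET price = ?, collected_at = ?, checked_at = ? WHERE ean = ?",
            (price, stamp, stamp, ean),
        )
    else:
        # Value unchanged: only refresh the verification timestamp.
        conn.execute("UPDATE products SET checked_at = ? WHERE ean = ?", (stamp, ean))
    conn.commit()


def stale_eans(conn, max_age, now=None):
    """Return EANs whose checked_at is older than max_age, oldest first."""
    cutoff = _stamp((now or datetime.now(timezone.utc)) - max_age)
    rows = conn.execute(
        "SELECT ean FROM products WHERE checked_at < ? ORDER BY checked_at", (cutoff,)
    )
    return [ean for (ean,) in rows]
```

Because every timestamp is stored in UTC with the same fixed-width format, plain string comparison in SQL sorts them chronologically, and the list returned by `stale_eans()` can feed directly into an alert or a re-scrape queue.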

To stay ahead of changes, implement change detection systems. These tools monitor your target pages for updates and flag modifications, enabling you to focus your scraping efforts where they're needed most.

You can also set up automated alerts to notify your team when data becomes stale or when key changes occur. For example, you might configure alerts to trigger when data hasn't been updated within a specific time frame. Notifications can be delivered via email, Slack, or webhook integrations, keeping everyone informed.

Finally, consider monitoring the DOM (Document Object Model) to catch layout changes that could disrupt your scrapers. Use status indicators like "new", "same", "changed", or "removed" to track updates and maintain accuracy in your data collection process.
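
The four status indicators can be computed by comparing the previous scrape with the current one. The sketch below applies them to scraped records rather than raw DOM nodes, which is one possible interpretation. The record layout and function names are assumptions.

```python
import hashlib


def content_hash(record):
    """Hash a record's fields in a stable key order."""
    canonical = "|".join(f"{key}={record[key]}" for key in sorted(record))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def classify_changes(previous, current):
    """Compare two snapshots (product id -> record) and label each product."""
    statuses = {}
    for product_id, record in current.items():
        if product_id not in previous:
            statuses[product_id] = "new"
        elif content_hash(record) == content_hash(previous[product_id]):
            statuses[product_id] = "same"
        else:
            statuses[product_id] = "changed"
    # Anything present last time but missing now has been removed.
    for product_id in previous.keys() - current.keys():
        statuses[product_id] = "removed"
    return statuses
```

Storing only the hash from the previous run is enough to detect changes, which keeps the comparison cheap even for large catalogs.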

Maintaining Data Quality and Accuracy

Real-time scraping and automation can keep your data updated, but ensuring its quality is just as important. Accurate and reliable data is the backbone of actionable insights. Without it, even the most sophisticated scraping setups can fall short. Poor data quality is not just a minor inconvenience: bad product data costs businesses an estimated $15 million annually. That’s why validation and cleaning are critical components of any data scraping pipeline.

Checking Data and Finding Errors

To maintain high-quality data, it’s essential to validate what you scrape. Start by applying field validation rules that check data types and formats against predefined standards. For example, in e-commerce data, you might verify that prices include valid currency symbols, product names are free of HTML tags, and availability statuses are consistent, such as "In Stock" or "Out of Stock."

Enforcing schemas early in the process ensures that scraped data meets strict quality benchmarks before it enters your database. For instance, when collecting product prices, confirm that the price field contains numeric values with correct decimal formatting and falls within a reasonable range.
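
A field validator along these lines might look like the sketch below. It assumes the currency symbol has already been stripped from the price, and the allowed availability values and the price range are placeholder choices rather than recommended limits.

```python
import re
from decimal import Decimal, InvalidOperation

# Assumed rules for illustration; adapt them to your own catalog.
ALLOWED_AVAILABILITY = {"In Stock", "Out of Stock"}
PRICE_RANGE = (Decimal("0.01"), Decimal("100000"))
HTML_TAG = re.compile(r"<[^>]+>")


def validate_product(record):
    """Return a list of validation errors; an empty list means the record passed."""
    errors = []

    name = record.get("name") or ""
    if not name.strip():
        errors.append("name: missing")
    elif HTML_TAG.search(name):
        errors.append("name: contains HTML tags")

    try:
        price = Decimal(str(record.get("price")))
        if not price.is_finite() or not PRICE_RANGE[0] <= price <= PRICE_RANGE[1]:
            errors.append("price: outside expected range")
    except InvalidOperation:
        errors.append("price: not numeric")

    if record.get("availability") not in ALLOWED_AVAILABILITY:
        errors.append("availability: unexpected value")

    return errors
```

Records with a non-empty error list can be routed to a quarantine table for review instead of being written to the main database.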

Cross-referencing your data with trusted sources can further improve accuracy. For example, validating stock prices against a reliable financial news website is a common practice. This approach not only ensures accuracy but also builds trust in your data - a critical factor for effective decision-making.

To catch errors like spelling mistakes, missing values, duplicates, and outliers, set up automated checks. These systems can flag issues early, allowing you to address them before they impact your analysis. Regularly auditing your scraping algorithms is also vital, especially as websites frequently update their layouts. These changes can disrupt data collection, so monitoring and promptly fixing your scraping processes is key.
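
For the outlier part of these checks, one common and robust technique is the modified z-score, which is based on the median and the median absolute deviation (MAD) instead of the mean. The sketch below is one possible implementation. The 3.5 threshold is the conventional default for this method, and the function name is illustrative.

```python
from statistics import median


def flag_price_outliers(prices, threshold=3.5):
    """Return the indices of prices whose modified z-score exceeds the threshold."""
    center = median(prices)
    mad = median(abs(price - center) for price in prices)
    if mad == 0:
        # More than half the values are identical, so there is no spread
        # to scale by; flag every value that differs from the median.
        return [i for i, price in enumerate(prices) if price != center]
    return [
        i
        for i, price in enumerate(prices)
        if 0.6745 * abs(price - center) / mad > threshold
    ]
```

Applied to the prices of one product across several sellers, this can catch a misplaced decimal point (1999.00 instead of 19.99) without being skewed by the bad value itself.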

| Issue | Impact on Data Accuracy | Solution |
|---|---|---|
| Duplicate Data | Skews insights and inflates records | AI deduplication |
| Missing Values | Leads to incomplete analysis | AI-powered imputation |
| Erroneous Data | Reduces reliability | Outlier detection & correction |
| Inconsistent Formats | Disrupts processing | Standardization techniques |

Once errors are detected and corrected, the next step is to standardize your data for consistency across the board.

Removing Duplicates and Standardizing Formats

After addressing errors, eliminating duplicates and ensuring standardized formats can significantly improve data reliability. Duplicate data can distort insights and inflate record counts, so it’s crucial to identify and remove duplicates before analysis.

The method for handling duplicates depends on your tools and setup. For Python users, leveraging sets instead of lists during scraping can automatically prevent duplicate entries. Once the data is clean, you can convert the set back to a list for storage. For larger-scale operations, storing data in a database and checking for existing entries before adding new ones is an effective way to handle duplicates. Alternatively, you can scrape all data first and remove duplicates afterward - an approach often used in time-sensitive scenarios like price monitoring.
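
Scraped records are usually dictionaries, which cannot be placed in a set directly, so a common variant of the set-based approach is to track a hashable key for each record. The sketch below assumes that an offer is uniquely identified by its EAN and seller. Both field names are illustrative.

```python
def deduplicate(offers, key_fields=("ean", "seller")):
    """Keep the first offer seen for each key and drop later duplicates."""
    seen = set()
    unique = []
    for offer in offers:
        key = tuple(offer[field] for field in key_fields)
        if key not in seen:
            seen.add(key)
            unique.append(offer)
    return unique
```

The same function works for the scrape-first, clean-later approach: collect everything into a list, then pass it through `deduplicate()` before storage. Order is preserved, so if you prefer to keep the most recent observation, reverse the list first.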

Equally important is data standardization. This involves cleaning and harmonizing your data to eliminate inconsistencies. For US-based e-commerce data, this might mean ensuring all prices include the dollar sign ($) with proper decimal formatting, dates follow the MM/DD/YYYY format, and measurements use imperial units. Standardizing terminology and naming conventions across sources is another critical step. For instance, normalizing product categories like "Electronics" and "Consumer Electronics" into a single term can simplify analysis and comparisons.
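
The US-oriented conventions described above could be applied with a few small helpers like the ones sketched here. The input date formats and the category alias table are assumptions, and the price helper expects US-style input (a period as the decimal separator).

```python
import re
from datetime import datetime
from decimal import Decimal, ROUND_HALF_UP

# Assumed alias table and accepted input formats; extend as needed.
CATEGORY_ALIASES = {
    "consumer electronics": "Electronics",
    "electronics": "Electronics",
}
DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y")


def standardize_price(raw):
    """Convert strings like '1,299.5 USD' to '$1,299.50'."""
    digits = re.sub(r"[^\d.]", "", raw)  # keep only digits and the decimal point
    amount = Decimal(digits).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)
    return f"${amount:,.2f}"


def standardize_date(raw):
    """Convert a recognized date string to MM/DD/YYYY."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).strftime("%m/%d/%Y")
        except ValueError:
            continue
    raise ValueError(f"unrecognized date format: {raw!r}")


def standardize_category(raw):
    """Map known category variants to a single canonical name."""
    cleaned = raw.strip()
    return CATEGORY_ALIASES.get(cleaned.lower(), cleaned)
```

Unknown categories pass through unchanged, and unparseable dates raise an error instead of being guessed, so problems surface during validation instead of silently corrupting the dataset.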

To manage large datasets from multiple sources, consider using data warehousing techniques. Centralized storage not only streamlines analysis and reporting but also ensures consistent formatting across all datasets.

Real-world examples show the impact of proper duplicate handling. In the food and beverage industry, Product Information Management (PIM) systems can automatically detect and correct duplicate listings of the same product with different nutritional details, ensuring compliance and reducing confusion. Similarly, in the automotive sector, automated tools can identify duplicate entries for parts with similar specifications but different part numbers. This allows teams to review and correct discrepancies, improving catalog accuracy and customer satisfaction.


Using ShoppingScraper for Real-Time Data Updates

Keeping your data current is crucial, and ShoppingScraper takes the hassle out of it with automated freshness checks. By eliminating the need for constant manual updates, the platform ensures your data stays up-to-date without extra effort.

At the core of ShoppingScraper's approach is EAN-level data collection, which tracks products in detail - including variants, colors, and sizes. This granular method ensures no detail is missed, offering a comprehensive view of pricing and availability.

"The data comes from Google Shopping, where we collect pricing data on an EAN-level. This means we capture every variant, color, and size - updated throughout the day." - Job van der Geest, Marketing Intelligence VML Netherlands

These automated tools lay the groundwork for the platform’s standout features.

ShoppingScraper Features That Keep Data Current

ShoppingScraper is designed to deliver accurate, up-to-the-minute data through several standout features. Its RESTful API responds to most requests in under 4 seconds, with recent updates improving speed by 25% while maintaining a reliable 99% uptime.

The platform also offers flexible scheduling options, allowing you to set data collection intervals - hourly, daily, or weekly - depending on your needs. For instance, hourly updates are ideal for monitoring flash sales, while weekly updates may suffice for slower-moving products.

Integration is seamless, thanks to multiple options that keep your workflows efficient without sacrificing data accuracy. Advanced matching algorithms combine EAN/GTIN data with title and URL matching to ensure precise product identification across platforms. This minimizes errors like misidentified or duplicated products. ShoppingScraper supports major marketplaces such as Google Shopping, Amazon, bol.com, and Coolblue, with data exportable in JSON or CSV formats.

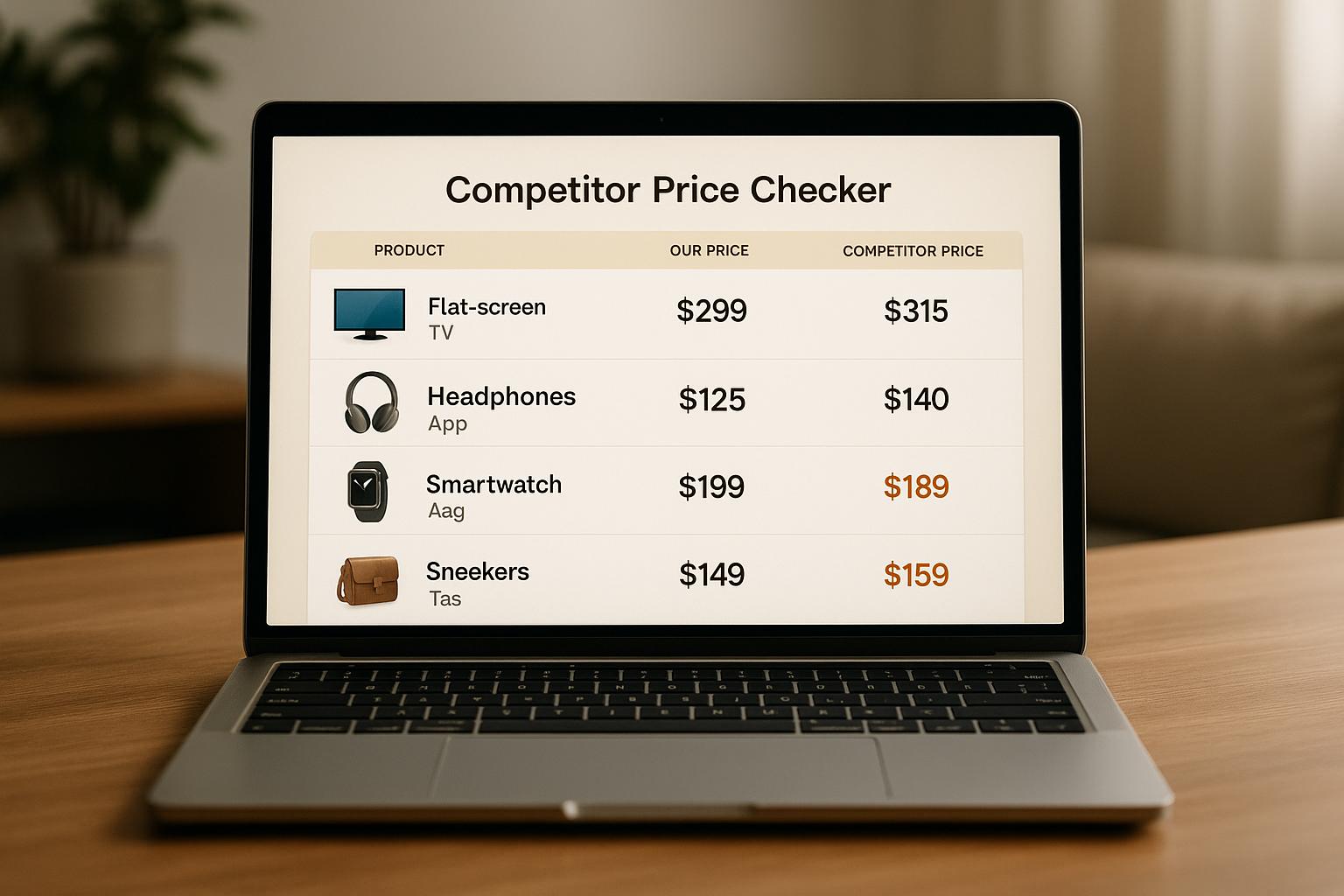

Example: Tracking Competitor Prices in Real-Time

These features are game-changers for businesses aiming to stay competitive. ShoppingScraper empowers users with real-time insights that can drive pricing strategies and other business decisions.

"ShoppingScraper supports us in making better decisions for our clients. The data accuracy in combination with the fast way to extract it to our own platforms makes ShoppingScraper a valuable partner." - Koen van den Eeden, OMG Transact Managing Director

For agencies juggling multiple clients, the platform’s ability to gather large volumes of relevant data is a major asset. This data feeds directly into their systems, giving specialists the insights they need to optimize advertising, pricing, and inventory management.

"ShoppingScraper has been a key asset for our agency. With the support of ShoppingScraper we are able to collect massive quantities of relevant data, which feeds our systems, and provides our specialists with data-driven insights that help them make better decisions about advertising, optimization, pricing and inventory management, leading to increased sales and profits." - Toon Hendrikx, Founder & CEO 10XCREW

The platform shines in price monitoring. When competitors adjust prices throughout the day, ShoppingScraper’s continuous updates ensure you have actionable, real-time data - essential in fast-moving markets.

Users frequently highlight the platform’s reliability, with reviews on SoftwareAdvice.com and GetApp.com rating it 4.8/5. Its user-friendly design, dependable API, and responsive customer support have made it a go-to solution for businesses needing fresh e-commerce data.

Getting started is easy with a 14-day free trial, which includes 100 credits. Additional requests can be purchased in blocks of 10,000, with pricing starting at $29 per block for the Advanced plan and $15 per block for the Enterprise plan.

Conclusion: Fresh Data Powers E-commerce Success

Keeping data current is more than a technical necessity - it’s the backbone of thriving in the fast-paced world of e-commerce. The strategies outlined here, from dependable data collection to automated updates and rigorous quality checks, work together to ensure your business operates with accurate and timely information.

The stakes are high. Poor data quality costs organizations an average of $15 million annually. In e-commerce specifically, about 30% of online shoppers abandon their carts because of incorrect product data. These figures make it clear: prioritizing data freshness isn’t optional - it’s essential.

But the benefits go well beyond avoiding losses. Companies that focus on keeping their data fresh can react faster to market shifts, connect with customers more effectively, and outpace competitors. With 63% of organizations citing data analytics as the most impactful factor in decision-making, having up-to-date data is crucial for staying ahead.

Real-time processing, automated updates, and strict quality control are the pillars of successful data management. Tools like ShoppingScraper bring these strategies to life, offering API integration and automated scheduling to keep your data accurate and actionable.

The numbers speak for themselves: global e-commerce sales are expected to surpass $4.1 trillion by 2024, and by 2040, an estimated 95% of purchases will happen online. In this rapidly changing environment, data freshness isn’t just a competitive edge - it’s a matter of survival. Businesses that adopt robust data freshness practices today will be well-positioned to thrive in tomorrow’s digital marketplace.

"By ensuring that data is up-to-date, organizations are better positioned to navigate through a dynamic landscape, adapt to changes, make informed decisions, and derive valuable insights from their data ecosystems." – Atlan Experts

Investing in fresh data doesn’t just improve decision-making and reduce costs - it transforms customer experiences. As the digital economy continues to grow, the companies that succeed will be those that treat data freshness as a strategic priority, not just a technical checkbox.

FAQs

How do I decide how often to scrape different types of data for my business?

When deciding how often to scrape data, the frequency largely depends on how frequently the information changes. For dynamic data - like pricing or stock availability - scraping in real time or at short intervals is essential to keep your information accurate and competitive. On the other hand, slower-changing data - such as product descriptions or customer reviews - can often be scraped less frequently, such as weekly or even monthly.

To keep your data up-to-date without putting unnecessary strain on target websites, it's important to regularly assess how often the data you're monitoring gets updated. Adjust your scraping schedule based on these patterns. Finding the right balance ensures you stay informed while minimizing risks like IP bans.

What are the best practices for ensuring accurate and reliable data when web scraping?

To gather precise and trustworthy data through web scraping, it's essential to adopt a few best practices. Start by setting up automated validation checks to identify anomalies or inconsistencies in your data. Pair this with well-defined error management processes to tackle any issues promptly. Regularly reviewing your scraped data is also crucial to ensure it meets your quality standards.

Using tools with features like real-time monitoring and automated scheduling can go a long way in keeping your data accurate and up-to-date. You might also explore platforms such as ShoppingScraper, which provide options like API integration and structured data exports, making data collection smoother and more dependable.

How can tools like ShoppingScraper help ensure e-commerce data stays accurate and up-to-date?

Automation tools such as ShoppingScraper simplify the process of maintaining accurate and up-to-date e-commerce data by providing real-time scraping features. These tools automatically gather details like pricing, product specifications, and seller information from various platforms, ensuring your data always mirrors the latest updates.

With options like automated scheduling, API integration, and data export in JSON or CSV formats, ShoppingScraper minimizes manual work, enhances precision, and saves valuable time. This makes it easier for businesses to track prices, evaluate competitors, and stay competitive in a rapidly evolving market.
