How to Extract Product Data from Google Shopping: Step-by-Step

January 6, 2025

Want to gather product data from Google Shopping? Here's how you can do it quickly and effectively while staying compliant with legal guidelines. This guide covers everything from tools to advanced techniques.

Key Takeaways:

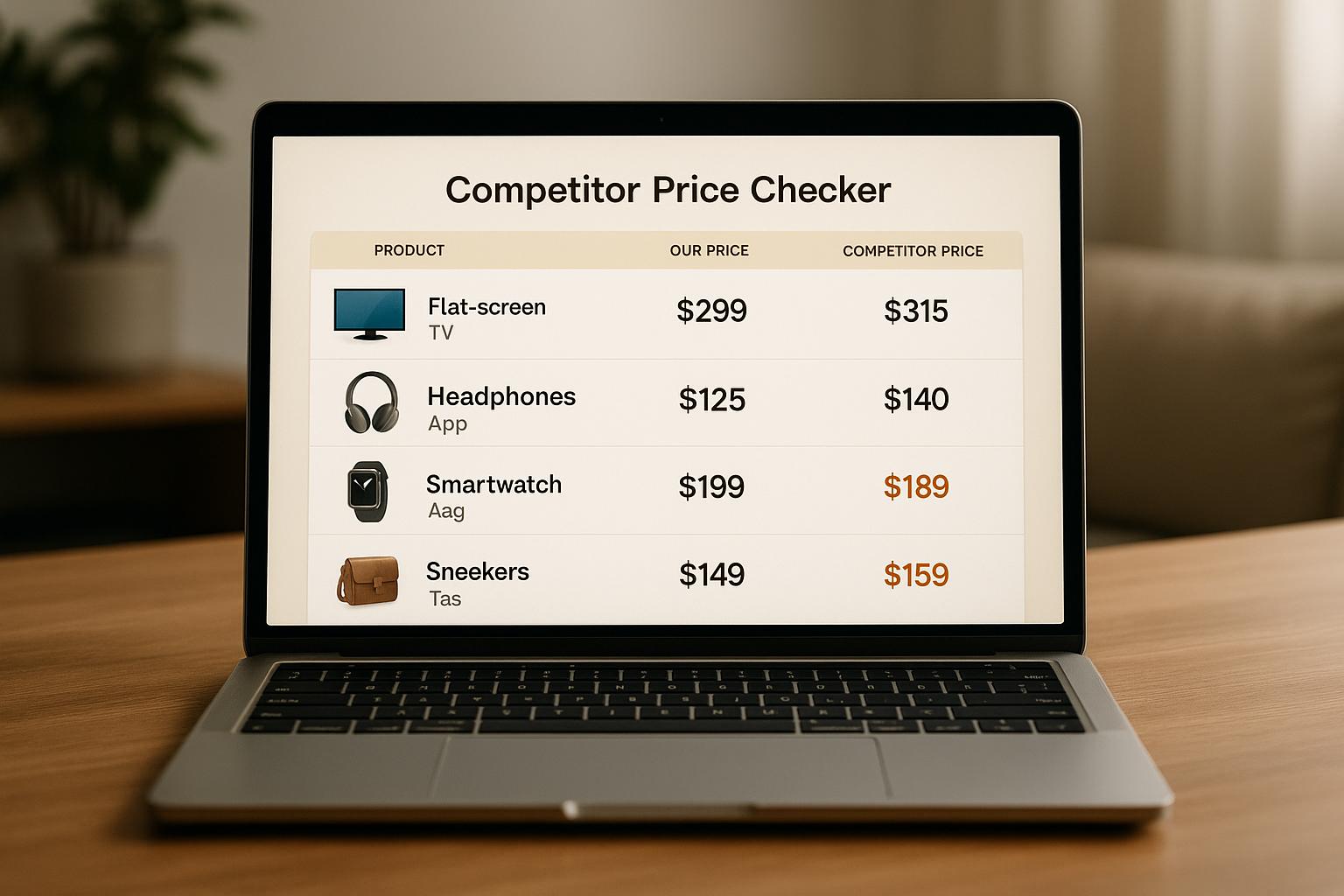

- Why Extract Data? Monitor competitor pricing, track product availability, and analyze trends.

- Tools to Use: APIs like ShoppingScraper, BeautifulSoup for parsing, and proxy services like Crawlbase.

- Compliance Tips: Respect rate limits, collect only public data, and follow platform rules.

- Step-by-Step Process: Set up Python, use APIs for data collection, and organize data in JSON or CSV formats.

- Advanced Techniques: Use residential proxies to avoid IP blocks and customize data extraction with Zenserp parameters.

By the end, you'll know how to extract and manage Google Shopping data efficiently while staying within legal boundaries.

Preparation for Google Shopping Data Scraping

Getting ready before extracting data from Google Shopping is crucial. A solid setup helps reduce mistakes and ensures better data quality.

Tools and Technologies Needed

To scrape data efficiently, you can use the ShoppingScraper API for Google Shopping, which handles the HTTP requests for retrieving data. It offers API integration and automated scheduling, making the process smoother.

Or you can do it yourself using:

- BeautifulSoup: A Python library for parsing HTML and extracting specific elements.

- Proxy services like Crawlbase: Help prevent IP blocks and keep scraping uninterrupted.

Ethical and Legal Guidelines

Having the tools is just one part of the equation. It's essential to ensure your methods comply with ethical and legal standards.

In the U.S., scraping public data is generally allowed, but you must follow specific rules to stay on the right side of the law. Here's what to keep in mind:

- Respect rate limits: Avoid overwhelming the platform with too many requests.

- Steer clear of sensitive data: Only collect publicly available information.

- Be transparent: Document your methods and practices clearly.

These steps not only help you stay compliant but also ensure responsible data collection. Keep an eye on platform policies and update your practices as needed [1][2].
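
As one way to respect rate limits in practice, here is a minimal sketch of a client-side throttle that spaces requests at least a fixed interval apart. The `Throttle` class and the 2-second interval are illustrative assumptions, not values published by any platform; pick an interval that fits the limits of the service you are using.

```python
import time


class Throttle:
    """Enforce a minimum interval between consecutive calls to wait()."""

    def __init__(self, min_interval_seconds, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval_seconds
        self._clock = clock  # injectable so the class can be tested without real waiting
        self._sleep = sleep
        self._last_call = None

    def wait(self):
        """Sleep just long enough to keep calls min_interval apart."""
        now = self._clock()
        if self._last_call is not None:
            remaining = self.min_interval - (now - self._last_call)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last_call = now


# Illustrative value only: one request every 2 seconds
throttle = Throttle(min_interval_seconds=2.0)
```

Calling `throttle.wait()` immediately before each request keeps the pacing logic in one place, so the interval can be adjusted without touching the request code.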

Steps to Extract Google Shopping Data

Extracting data from Google Shopping requires a methodical approach and the right tools. Follow these steps to gather accurate product information.

Setting Up Your Environment

First, ensure you have Python 3.8 or higher installed. Create a virtual environment to keep dependencies organized:

    python -m venv shopping_scraper

    # Unix/macOS
    source shopping_scraper/bin/activate

    # Windows
    shopping_scraper\Scripts\activate
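
The examples in this guide import `requests` and `bs4`, so both need to be installed in the virtual environment. A small check like the following can confirm the setup before you start. This is a sketch: the function names are hypothetical, and the only facts it relies on are the Python 3.8 minimum stated above and the pip package names `requests` and `beautifulsoup4`.

```python
import importlib.util
import sys

# Import name -> pip package name for the libraries used in this guide
REQUIRED_PACKAGES = {"requests": "requests", "bs4": "beautifulsoup4"}


def missing_packages(required=REQUIRED_PACKAGES):
    """Return the pip names of required packages that cannot be imported."""
    return [
        pip_name
        for module_name, pip_name in required.items()
        if importlib.util.find_spec(module_name) is None
    ]


def check_environment(min_version=(3, 8)):
    """Return a list of human-readable problems; an empty list means ready."""
    problems = []
    if sys.version_info < min_version:
        problems.append(f"Python {min_version[0]}.{min_version[1]}+ is required")
    missing = missing_packages()
    if missing:
        problems.append("Install with: pip install " + " ".join(missing))
    return problems


if __name__ == "__main__":
    for problem in check_environment():
        print(problem)
```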

With your environment ready, you can move on to using APIs for efficient data collection.

Using APIs for Data Collection

The Crawlbase API is a popular choice for accessing Google Shopping data. Their Growth plan, priced at €199/month, allows up to 50,000 requests and supports multiple data formats [2].

Here’s a quick example of how to fetch product data using the API:

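    import requests

    API_KEY = 'your_api_key'
    TARGET_URL = 'your_target_url'  # Replace with the Google Shopping URL you want to scrape

    # Pass the target URL as a parameter so requests URL-encodes it correctly
    response = requests.get(
        'https://api.crawlbase.com/shopping',
        params={'token': API_KEY, 'url': TARGET_URL},
        timeout=30,
    )
    response.raise_for_status()  # Stop early on HTTP errors
    product_data = response.json()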

Once you retrieve the data, the next step is to structure and organize it.

Data Extraction and Management

To ensure complete and clean data collection, filter by attributes like price or category, handle pagination, and export the results in formats like JSON or CSV.

Here’s an example using BeautifulSoup to parse product details:

    from bs4 import BeautifulSoup

    def text_or_none(element):
        """Return an element's stripped text, or None if the element was not found."""
        return element.text.strip() if element is not None else None

    # Parse HTML and extract product details like title, price, and seller.
    # The tag and class names are placeholders; match them to the actual page markup.
    def extract_product_details(html_content):
        soup = BeautifulSoup(html_content, 'html.parser')
        products = []
        for item in soup.find_all('div', class_='product-item'):
            products.append({
                'title': text_or_none(item.find('h3')),
                'price': text_or_none(item.find('span', class_='price')),
                'seller': text_or_none(item.find('div', class_='seller')),
            })
        return products
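
The filtering and export steps described in this section can be sketched as follows. The helpers assume each product is a dictionary with `title`, `price`, and `seller` keys, as in the parsing example, and that prices use `.` as the decimal separator and `,` for thousands. All function names here are illustrative, and the price parsing would need adjusting for other locales.

```python
import csv
import json
import re


def parse_price(text):
    """Extract the first number from a price string such as '$1,299.00'.

    Assumes '.' is the decimal separator and ',' groups thousands.
    Returns None when no number is found.
    """
    match = re.search(r"\d[\d,]*(?:\.\d+)?", text)
    if match is None:
        return None
    return float(match.group().replace(",", ""))


def filter_by_max_price(products, max_price):
    """Keep products whose parsed price is at or below max_price."""
    kept = []
    for product in products:
        price = parse_price(product.get("price") or "")
        if price is not None and price <= max_price:
            kept.append(product)
    return kept


def export_json(products, path):
    """Write the product list to a JSON file."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(products, f, ensure_ascii=False, indent=2)


def export_csv(products, path, fieldnames=("title", "price", "seller")):
    """Write the product list to a CSV file with a header row."""
    with open(path, "w", encoding="utf-8", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=fieldnames, extrasaction="ignore")
        writer.writeheader()
        writer.writerows(products)
```

Keeping the raw price string in the record and parsing it only for comparisons means the exported files still show the price exactly as it appeared on the page.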


Advanced Methods for Data Extraction

Once you’ve got the basics of data extraction down, these advanced techniques can help you improve both efficiency and accuracy.

Using Residential Proxies to Avoid IP Blocks

Residential proxies use real user IPs assigned by ISPs, making your scraping activities look legitimate. This reduces the chances of getting blocked. Here's an example of how to use Bright Data's proxy service:

    import requests

    proxy_url = "http://username:password@brd.superproxy.io:22225"
    proxies = {'http': proxy_url, 'https': proxy_url}
    response = requests.get('https://shopping.google.com/search?q=laptop', proxies=proxies)

The HTML content of the page is then available as response.text, which you can parse with tools like BeautifulSoup to extract product details.

Proxies like these help maintain access to platforms like Google Shopping, giving you more flexibility in customizing your data extraction.
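
If your provider gives you several proxy endpoints, rotating through them spreads requests across IPs. The following is a minimal round-robin sketch; `ProxyRotator` and the `example.com` hosts are placeholders invented for illustration, not part of any provider's API.

```python
import itertools


def build_proxies(proxy_url):
    """Return the proxies mapping that requests expects for one proxy URL."""
    return {"http": proxy_url, "https": proxy_url}


class ProxyRotator:
    """Cycle through a fixed list of proxy URLs in round-robin order."""

    def __init__(self, proxy_urls):
        if not proxy_urls:
            raise ValueError("at least one proxy URL is required")
        self._cycle = itertools.cycle(list(proxy_urls))

    def next_proxies(self):
        """Return the proxies mapping for the next proxy in the rotation."""
        return build_proxies(next(self._cycle))


# Placeholder endpoints; substitute the hosts and credentials from your provider
rotator = ProxyRotator([
    "http://username:password@proxy-1.example.com:8000",
    "http://username:password@proxy-2.example.com:8000",
])

# Each request then takes the next proxy in turn, for example:
# response = requests.get(url, proxies=rotator.next_proxies(), timeout=30)
```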

Using the ShoppingScraper API for Data Extraction

The ShoppingScraper API provides robust functionality for extracting product data from Google Shopping. Here's how to implement it effectively:

Core Implementation

    import requests

    def get_shopping_data(api_key, ean):
        """Fetch offers and product details for one EAN."""
        base_url = "https://api.shoppingscraper.com"

        # Get product offers
        def fetch_offers():
            endpoint = f"{base_url}/offers"
            params = {
                "site": "shopping.google.nl",
                "ean": ean,
                "api_key": api_key
            }
            return requests.get(endpoint, params=params).json()

        # Get product details
        def fetch_product_info():
            endpoint = f"{base_url}/info"
            params = {
                "site": "shopping.google.nl",
                "ean": ean,
                "api_key": api_key,
                "use_cache": True
            }
            return requests.get(endpoint, params=params).json()

        return {
            "offers": fetch_offers(),
            "product_info": fetch_product_info()
        }
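
Because the function above looks products up by EAN, it can be worth checking each code locally before spending an API request on it. The sketch below validates the standard EAN-13 check digit. It only covers 13-digit codes; whether the API accepts other identifier lengths is not stated here, so treat that as something to confirm. The function names are illustrative.

```python
def is_valid_ean13(code):
    """Return True if code is 13 ASCII digits with a correct EAN-13 check digit."""
    if len(code) != 13 or not (code.isascii() and code.isdigit()):
        return False
    digits = [int(ch) for ch in code]
    # Weights alternate 1, 3, 1, 3, ... across the first 12 digits
    total = sum(d * (3 if i % 2 else 1) for i, d in enumerate(digits[:12]))
    return (10 - total % 10) % 10 == digits[12]


def split_eans(codes):
    """Separate codes into (valid, invalid) lists, preserving order."""
    valid, invalid = [], []
    for code in codes:
        (valid if is_valid_ean13(code) else invalid).append(code)
    return valid, invalid
```

Running `split_eans` over an input list first lets you log or correct the rejected codes instead of discovering them through failed lookups.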

ShoppingScraper’s Advanced plan offers features like automated scheduling and advanced filtering, making it a great choice for tasks like price tracking or analyzing multiple marketplaces.

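To manage connection issues, you can use a retry mechanism with progressive delays between attempts:

    import time

    def retry_request(func, max_attempts=3):
        """Call func() until it returns HTTP 200, backing off between attempts."""
        for attempt in range(max_attempts):
            try:
                response = func()
                if response.status_code == 200:
                    return response.json()
            except Exception:
                # Re-raise the error once the final attempt has failed
                if attempt == max_attempts - 1:
                    raise
            # Back off (1s, then 2s) after an exception or a non-200 response
            if attempt < max_attempts - 1:
                time.sleep(2 ** attempt)
        return None

This function makes up to three attempts and waits longer after each failure (1 second, then 2 seconds), whether the failure is an exception or a non-200 response, to handle issues like network interruptions or rate limits [1][2]. It returns None if every attempt ends with a non-200 response.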
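
One common variation on the retry helper above adds random jitter to the delay, so that many clients retrying at once do not all hit the server at the same moment. The sketch below is an assumption-laden illustration rather than a drop-in replacement: `backoff_delay`, `call_with_backoff`, and the default base and cap values are hypothetical, and exceptions from `func` are left to propagate to keep the example short.

```python
import random
import time


def backoff_delay(attempt, base=1.0, cap=30.0, rng=random.random):
    """Return a randomized delay between 0 and min(cap, base * 2**attempt) seconds."""
    return rng() * min(cap, base * (2 ** attempt))


def call_with_backoff(func, should_retry, max_attempts=3, sleep=time.sleep):
    """Call func() until should_retry(result) is false or attempts run out.

    Returns the last result obtained. Exceptions raised by func propagate.
    """
    result = None
    for attempt in range(max_attempts):
        result = func()
        if not should_retry(result):
            return result
        # Wait before the next attempt, but not after the final one
        if attempt < max_attempts - 1:
            sleep(backoff_delay(attempt))
    return result
```

With `requests`, `should_retry` could be something like `lambda r: r.status_code != 200`, which keeps the retry policy separate from the request itself.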

Conclusion and Key Points

Overview of the Extraction Process

Extracting data from Google Shopping requires a mix of technical expertise and adherence to ethical guidelines. Tools such as ShoppingScraper offer dependable solutions for accessing product data while following platform rules. Using these tools alongside residential proxies helps reduce the risk of IP blocks, especially during large-scale operations.

Choosing structured formats like JSON or CSV ensures the data remains organized and easier to analyze. For instance, the Zenserp API's built-in JSON formatting simplifies the process of parsing and storing product details.

While these methods work well today, staying effective means keeping up with changes in both technology and regulations.

Future Updates and Compliance

The methods and rules for extracting data from Google Shopping are constantly changing. As of January 2025, businesses should focus on the following:

| Aspect | Current Requirements | Future Considerations |
|---|---|---|
| Data Access | Use authorized APIs and tools | Integration with official APIs |
| Rate Limiting | Employ progressive delays | Implement queue management |
| Legal Compliance | Follow platform rules | Adhere to stricter regulations |
| Proxy Management | Rotate residential proxies | Leverage AI for optimization |

To keep operations running smoothly:

- Stay Informed: Regularly check Google Shopping's documentation for updates.

- Validate Your Data: Ensure the data is accurate and complete before using it.

- Adopt New Technologies: AI and machine learning are becoming essential for improving efficiency in data extraction.
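
As a starting point for the data validation step in the list above, the following sketch checks each record for missing or empty fields before it is used. The required field names mirror the `title`, `price`, and `seller` keys from the parsing example; the function names are illustrative, and real pipelines will likely need stricter checks such as price ranges or duplicate detection.

```python
# Field names follow the parsing example earlier in this guide
REQUIRED_FIELDS = ("title", "price", "seller")


def validate_product(product, required=REQUIRED_FIELDS):
    """Return a list of problems found in one product record."""
    problems = []
    for field in required:
        value = product.get(field)
        if value is None or not str(value).strip():
            problems.append(f"missing {field}")
    return problems


def split_valid(products):
    """Separate records into (valid, rejected).

    Each rejected entry pairs the record with its list of problems.
    """
    valid, rejected = [], []
    for product in products:
        problems = validate_product(product)
        if problems:
            rejected.append((product, problems))
        else:
            valid.append(product)
    return valid, rejected
```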

As tools like ShoppingScraper continue to improve, combining them with forward-thinking practices will be crucial for staying both efficient and compliant. By blending technical know-how with a focus on legal standards, businesses can ensure their data extraction strategies remain effective and future-ready [3][4].

FAQs

Is it legal to scrape Google Shopping?

Scraping publicly available data, such as Google Shopping listings, is generally allowed as long as it complies with laws like the Computer Fraud and Abuse Act (CFAA) in the U.S. and doesn’t breach terms of service. Staying within legal boundaries requires attention to a few key points:

- Follow the platform’s terms of service.

- Handle data responsibly, ensuring privacy compliance.

- Use proper rate limiting to avoid overloading servers.

- Stick to authorized methods for data collection.

Services like ShoppingScraper and Crawlbase offer tools to extract data in a structured and compliant way, helping minimize legal risks while respecting platform rules [2][3]. Using APIs and proxies, as mentioned earlier, helps keep scraping efficient, but neither guarantees that a given use is lawful.

To stay compliant, it’s important to regularly check platform policies, use approved tools, and adopt responsible practices like rate limiting and progressive delays. Residential proxies can also help avoid technical blocks while maintaining compliance [3].

Although tools and methods exist to ensure legal data scraping, it’s wise to consult legal experts for specific situations. This is especially important as laws and platform policies continue to change through 2025 and beyond [2]. By following these practices, businesses can safely collect data while staying within legal and ethical boundaries, which is critical for long-term success in e-commerce.
