Common Errors in Dynamic Website Scraping

September 15, 2025

Dynamic website scraping is challenging because these sites load content using JavaScript or AJAX after the initial page load. Traditional scrapers often fail to handle this, leading to incomplete data. Key issues include:

- Timing Problems: Extracting data before the page fully loads can result in gaps.

- Access Errors: Dynamic URLs, session handling, rate limits, and geographic restrictions can block scrapers.

- Parsing Failures: Sites frequently change layouts or use inconsistent data formats, breaking extraction rules.

- Anti-Scraping Measures: Techniques like CAPTCHAs, IP blocking, and behavioral analysis detect and stop bots.

To overcome these, use tools like Selenium or Playwright for JavaScript rendering, manage proxies for IP rotation, and implement error recovery systems. Ethical practices - like respecting a site's robots.txt and adhering to legal guidelines - are equally important. Automated tools like ShoppingScraper simplify these tasks by handling JavaScript, proxies, and anti-scraping defenses, ensuring smoother and more reliable data collection.

Most Common Dynamic Website Scraping Errors

Scraping dynamic websites, especially e-commerce platforms, comes with its fair share of challenges. These issues often fall into three main categories, each of which can disrupt your data collection efforts. By understanding these common pitfalls, you can create more reliable scraping systems and minimize downtime.

Content Loading and Rendering Problems

One of the biggest hurdles with dynamic websites is timing. Scrapers often attempt to extract data before JavaScript has fully loaded, leading to incomplete datasets. E-commerce sites frequently rely on separate API calls to load crucial details like product information, prices, and images, meaning early data extraction can leave you with gaps in your results.

JavaScript execution failures also pose a significant issue. When your scraping tool struggles to render JavaScript-heavy content, entire sections of data can become inaccessible. This tends to happen on sites that use complex JavaScript frameworks or poorly optimized code.

Another challenge is infinite scroll and lazy loading, which require simulated scrolling to capture all available content. Without proper handling, your scraper might only grab the initial batch of items, missing hundreds - or even thousands - of products that load as the page extends.
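The scrolling approach can be sketched roughly as follows. This is a minimal illustration, not a definitive implementation: the function name `scroll_until_stable` and its parameters are hypothetical, and `page` is assumed to behave like a Playwright sync `Page` object (only its `evaluate` and `wait_for_timeout` methods are used).

```python
def scroll_until_stable(page, max_rounds=30, pause_ms=1000):
    """Scroll to the bottom repeatedly until the page height stops growing.

    `page` is assumed to expose `evaluate(js)` and `wait_for_timeout(ms)`,
    as a Playwright sync Page does. Returns the number of scrolls performed.
    """
    last_height = page.evaluate("document.body.scrollHeight")
    for round_number in range(1, max_rounds + 1):
        # Jump to the current bottom of the document to trigger lazy loading.
        page.evaluate("window.scrollTo(0, document.body.scrollHeight)")
        # Give newly requested items time to arrive and render.
        page.wait_for_timeout(pause_ms)
        new_height = page.evaluate("document.body.scrollHeight")
        if new_height == last_height:
            # Nothing new was added, so assume the end of the list was reached.
            return round_number
        last_height = new_height
    # Stop after max_rounds so a truly endless feed cannot loop forever.
    return max_rounds
```

The `max_rounds` cap is a deliberate safety limit. A fixed pause is the simplest option here; waiting for a specific element to appear is usually more reliable when the site's markup is known.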

HTTP Status and Access Problems

Access-related issues can further complicate the process of scraping dynamic websites.

- Dynamic URL structures: Many e-commerce sites use session tokens or temporary parameters in their URLs. Once these expire, a URL that worked earlier can return a 404 error.

- Session management issues: Sites that require user authentication or maintain session states can return 403 Forbidden errors if you fail to handle cookies or session data properly. Logged-in users, for example, might see exclusive pricing or products unavailable to anonymous visitors.

- Rate limiting and IP blocking: Aggressive scraping can activate anti-bot measures, resulting in 429 or 503 errors. These restrictions are designed to prevent server overload but can completely halt your data collection if triggered.

- Geographic restrictions: Some websites serve different content based on the user's location. This can lead to access denied errors, especially on international platforms with region-specific pricing, product availability, or compliance rules.

Data Parsing and Extraction Failures

Even if you overcome loading and access issues, parsing challenges can still undermine your data collection efforts.

CSS selector instability is a common problem. E-commerce sites frequently update their HTML structures - changing class names, reorganizing layouts, or redesigning product pages. These updates can break your existing extraction rules, leaving your scraper unable to retrieve data.

Inconsistent data formats across product categories also create parsing headaches. For instance, a clothing retailer might list sizes as text ("Small", "Medium", "Large") for apparel but use numbers ("6", "7", "8") for shoes. If your scraper isn’t designed to handle these variations, it could miss key product attributes.

Missing or null values in product data can cause poorly designed scrapers to crash. Some products might lack details like reviews or specifications, so your scraper needs to handle these gaps gracefully to avoid interruptions.
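One way to handle such gaps is to treat every optional field as possibly absent and convert it defensively. The sketch below is illustrative only: the `Product` class, the field names (`title`, `price`, `reviews`), and the helper functions are assumptions made for this example, not part of any particular site or library.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Product:
    title: str
    price: Optional[float] = None
    review_count: Optional[int] = None


def parse_price(raw) -> Optional[float]:
    """Convert strings like '$1,299.00' to a float; return None if unusable."""
    if raw is None:
        return None
    cleaned = str(raw).replace("$", "").replace(",", "").strip()
    try:
        return float(cleaned)
    except ValueError:
        return None


def parse_int(raw) -> Optional[int]:
    """Convert strings like '1,204' to an int; return None if unusable."""
    if raw is None:
        return None
    try:
        return int(str(raw).replace(",", "").strip())
    except ValueError:
        return None


def build_product(raw: dict) -> Optional[Product]:
    """Build a Product from scraped fields, tolerating missing optional data."""
    title = (raw.get("title") or "").strip()
    if not title:
        # A record without a title is treated as unusable rather than crashing.
        return None
    return Product(
        title=title,
        price=parse_price(raw.get("price")),
        review_count=parse_int(raw.get("reviews")),
    )
```

Returning `None` for unusable values keeps the pipeline running and leaves it to downstream code to decide whether an incomplete record is still worth storing.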

Lastly, dynamic content injection via JavaScript can alter the DOM structure after your initial parsing. This is especially common on sites that personalize content based on user behavior or A/B testing, where the same URL might serve different HTML structures to different visitors. Without adaptable selectors, your scraper could end up targeting the wrong elements entirely.

Anti-Scraping Methods and How to Handle Them

When it comes to data extraction, dealing with anti-scraping measures is one of the biggest hurdles. Many e-commerce sites and other platforms have implemented strategies to protect their data, making the process more challenging. To navigate these defenses effectively - and ethically - you need a thoughtful approach that complies with legal requirements and the specific rules of each site.

Types of Anti-Scraping Methods

Websites use a variety of techniques to detect and block scraping activities. Here are some of the most common ones:

- Rate Limiting: Sites track how often requests are made from a single IP address. If the frequency exceeds a certain threshold, connections may be blocked.

- IP-Based Blocking: Unusual activity patterns from an IP address can lead to it being flagged and denied access.

- CAPTCHA Challenges: CAPTCHAs are designed to distinguish humans from bots by requiring users to complete simple tests, such as identifying images or entering text.

- User-Agent Filtering: Websites analyze the User-Agent headers in requests. Default or outdated headers, often used by bots, might raise red flags.

- JavaScript Challenges: Some sites require JavaScript to be executed to load content. Basic HTTP clients can’t handle this, making browser simulation necessary.

- Behavioral Analysis: Advanced systems look for human-like behaviors, such as scrolling or clicking, to identify automated activity.

Understanding these methods is the first step toward developing strategies to work around them.

Working Solutions for Anti-Scraping Methods

To counter these defenses, you’ll need to employ specific techniques that make your requests appear more natural and less detectable:

- Request Throttling: Introducing random delays between requests helps mimic the behavior of a real user and avoid triggering rate limits.

- Proxy Rotation: Using multiple proxies can spread requests across different IP addresses, reducing the likelihood of being blocked.

- Browser Automation Tools: Tools like Selenium or Playwright can simulate an actual browser, making it possible to handle JavaScript challenges and even some CAPTCHAs. Keep in mind, though, that this approach requires more resources.

- Header Randomization: Changing headers, including User-Agent strings, makes your traffic look more like that of a regular browser session.

- Session Management: Maintaining cookies and authentication tokens ensures continuity, making your activity appear as if it’s coming from a consistent user.

- Respecting Robots.txt: While not legally enforceable, following the guidelines outlined in a site's robots.txt file shows respect for the site owner’s preferences and helps maintain a good relationship.
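As an illustration of the request throttling item, the following sketch pauses for a randomized interval between fetches. The names `polite_delay` and `fetch_all`, the default timings, and the injected `fetch` callable are all assumptions for this example; any HTTP client could be plugged in as `fetch`.

```python
import random
import time


def polite_delay(base_seconds=2.0, jitter_seconds=1.5, rng=random):
    """Return base_seconds plus a random extra in [0, jitter_seconds]."""
    return base_seconds + rng.uniform(0.0, jitter_seconds)


def fetch_all(urls, fetch, sleep=time.sleep, rng=random):
    """Call fetch(url) for each URL, pausing a randomized interval between calls."""
    results = []
    for index, url in enumerate(urls):
        if index > 0:
            # No delay before the first request; randomized delay before the rest.
            sleep(polite_delay(rng=rng))
        results.append(fetch(url))
    return results
```

Passing `sleep` and `rng` as parameters is a design choice that makes the pacing easy to test without actually waiting.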

Compliance Considerations

Ethical scraping isn’t just about avoiding detection - it’s about doing the right thing. Always review a website’s terms of service and, whenever possible, use official APIs for data collection. Adhering to rate limits, respecting robots.txt, and following other site-specific rules ensures that your methods remain responsible. This not only minimizes disruptions but also helps maintain the integrity and reliability of the data you collect.
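Checking robots.txt before fetching can be done with Python's standard `urllib.robotparser` module. The sketch below is a minimal example; the helper name `build_robots_checker`, the sample rules, and the `my-bot` user agent are placeholders chosen for illustration.

```python
from urllib.robotparser import RobotFileParser


def build_robots_checker(robots_txt: str, user_agent: str):
    """Parse robots.txt text; return (allowed(url) -> bool, crawl_delay or None)."""
    parser = RobotFileParser()
    # parse() takes the file's lines; use set_url() and read() to fetch a live file.
    parser.parse(robots_txt.splitlines())

    def allowed(url: str) -> bool:
        return parser.can_fetch(user_agent, url)

    return allowed, parser.crawl_delay(user_agent)


# Sample rules, invented for this example.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /cart
Crawl-delay: 5
"""

allowed, delay = build_robots_checker(SAMPLE_ROBOTS, "my-bot")
```

When a crawl delay is declared, it makes a reasonable lower bound for the pause between requests to that site.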

Error Handling, Logging, and Automation Setup

When dealing with the challenges of dynamic website scraping, having a solid error-handling strategy is crucial to keep minor issues from spiraling into major problems. Network timeouts, server errors, and unexpected page changes are just a few of the hurdles you’re bound to encounter. To stay ahead, you need a system that combines detailed logging with automated recovery mechanisms.

Setting Up Detailed Error Logging

Detailed logging is your first safety net when scraping goes awry. It gives you the visibility needed to pinpoint what went wrong and fix it fast.

Your logs should capture every relevant detail, including requests, failures, response times, and contextual data like URLs, timestamps, HTTP statuses, headers, and parameters. Organizing your logs by severity levels - INFO for routine operations, WARNING for recoverable problems, and ERROR for critical failures - can make troubleshooting much more efficient. For example, a successful page load might be logged as INFO, a retry as WARNING, and a complete failure as ERROR.

Using JSON formatting for your logs can take things a step further. Structured logs are easier to search, filter, and analyze, especially when you’re managing large-scale operations with thousands of requests. To keep your logging system manageable, rotate log files regularly and compress older ones. This not only saves storage space but also preserves historical data for identifying patterns and trends.
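A minimal sketch of structured, rotating logs using only Python's standard `logging` module might look like this. The `JsonFormatter` class, the `context` attribute convention, the file name, and the size limits are assumptions for illustration. `RotatingFileHandler` rotates files but does not compress them, so compression would need a separate step.

```python
import json
import logging
from logging.handlers import RotatingFileHandler


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record):
        payload = {
            "time": self.formatTime(record),
            "level": record.levelname,
            "message": record.getMessage(),
        }
        # Callers pass request details via extra={"context": {...}},
        # which logging attaches to the record as an attribute.
        payload.update(getattr(record, "context", {}))
        return json.dumps(payload)


def build_logger(path="scraper.log"):
    """Create a logger that writes JSON lines and rotates at about 5 MB."""
    logger = logging.getLogger("scraper")
    logger.setLevel(logging.INFO)
    if not logger.handlers:  # avoid adding duplicate handlers on repeat calls
        handler = RotatingFileHandler(path, maxBytes=5_000_000, backupCount=5)
        handler.setFormatter(JsonFormatter())
        logger.addHandler(handler)
    return logger
```

A retry could then be recorded with `logger.warning("retrying", extra={"context": {"url": url, "status": 503}})`, which keeps the URL and status searchable as separate fields.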

Automating Error Recovery

Once your logging system is in place, automation becomes the next critical step. When you’re scraping hundreds - or even thousands - of pages, manual intervention just isn’t practical. Automating recovery ensures smoother operations and minimizes downtime.

Start by implementing exponential backoff for retries. Begin with a short delay, like 1 second, and double it with each retry attempt. This method gives temporary server issues time to resolve without overwhelming the server, which might have been the cause of the problem in the first place.
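The doubling schedule can be sketched as below. The function names, the retry count, and the 60-second cap are assumptions added for this example; the source only specifies starting at 1 second and doubling.

```python
import time


def backoff_delays(max_retries=5, base_seconds=1.0, cap_seconds=60.0):
    """Return the wait before each retry: base, 2x base, 4x base, ... up to a cap."""
    return [min(base_seconds * 2 ** attempt, cap_seconds) for attempt in range(max_retries)]


def retry_with_backoff(operation, max_retries=5, base_seconds=1.0, sleep=time.sleep):
    """Call operation(); after each failure, wait a doubling delay and try again."""
    for delay in backoff_delays(max_retries, base_seconds):
        try:
            return operation()
        except Exception:
            # Broad catch keeps the sketch short; real code would catch only
            # errors that are worth retrying (timeouts, 5xx responses, ...).
            sleep(delay)
    # Final attempt after the last wait; any exception now propagates to the caller.
    return operation()
```

With the defaults, `backoff_delays()` yields waits of 1, 2, 4, 8, and 16 seconds. Adding a small random offset to each delay is a common refinement when many workers retry at once.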

Tailor your retry strategies based on the type of error. For instance:

- A 503 Service Unavailable error usually justifies several retries with backoff, as it’s often a temporary issue.

- A 403 Forbidden error likely means you’ve been blocked; in this case, switching proxies or tactics is a better approach.

- HTTP timeout errors usually benefit from retries, while 404 Not Found errors typically don’t warrant further attempts.
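The mapping from error type to response described in this list could be expressed as a small decision function. The `Action` enum and `decide_action` are hypothetical names, and giving up on statuses the list does not mention is an assumption of this sketch, not a rule from the text.

```python
from enum import Enum


class Action(Enum):
    RETRY = "retry"
    ROTATE_PROXY = "rotate_proxy"
    GIVE_UP = "give_up"


def decide_action(status=None, timed_out=False):
    """Choose how to react to a failed request based on the kind of failure."""
    if timed_out:
        return Action.RETRY          # timeouts usually benefit from a retry
    if status == 503:
        return Action.RETRY          # often a temporary server-side issue
    if status == 403:
        return Action.ROTATE_PROXY   # likely blocked; change proxy or tactics
    if status == 404:
        return Action.GIVE_UP        # the page is gone; retrying will not help
    # Assumption: anything not covered above is not retried automatically.
    return Action.GIVE_UP
```

Keeping this policy in one function makes it easy to adjust as you learn how a particular site behaves.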

Introduce circuit breakers to pause requests to endpoints that are consistently failing. After a set cooling-off period, you can test the endpoint with a single request to see if it’s back online.
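A circuit breaker along these lines might be sketched as follows, for a single-threaded scraper. The class name, threshold, and cooldown values are assumptions for illustration.

```python
import time


class CircuitBreaker:
    """Pause requests to an endpoint after repeated failures (single-threaded sketch)."""

    def __init__(self, failure_threshold=5, cooldown_seconds=60.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.cooldown_seconds = cooldown_seconds
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed (requests allowed)

    def allow_request(self):
        if self.opened_at is None:
            return True
        # Once the cooldown has elapsed, let a probe request through.
        return self.clock() - self.opened_at >= self.cooldown_seconds

    def record_success(self):
        # A successful request (including a probe) closes the circuit again.
        self.failures = 0
        self.opened_at = None

    def record_failure(self):
        self.failures += 1
        if self.failures >= self.failure_threshold:
            # Open the circuit, or restart the cooldown if a probe just failed.
            self.opened_at = self.clock()
```

The caller checks `allow_request()` before each fetch and reports the outcome afterwards. A failed probe restarts the cooldown, so a dead endpoint is contacted only once per cooling-off period.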

For blocked requests, rotating proxies from a pre-checked, healthy pool can make all the difference. This simple automation can turn hours of downtime into just a few minutes of disruption.
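A simple health-aware proxy pool could look like the sketch below. The `ProxyPool` class, its method names, and the failure limit are hypothetical, and the proxy addresses in the usage note are placeholders.

```python
class ProxyPool:
    """Hand out proxies in rotation, skipping ones that keep failing."""

    def __init__(self, proxies, max_failures=3):
        self._order = list(proxies)
        self.max_failures = max_failures
        self.failures = {proxy: 0 for proxy in self._order}
        self._next = 0

    def healthy(self):
        """Return the proxies that have not reached the failure limit."""
        return [p for p in self._order if self.failures[p] < self.max_failures]

    def get(self):
        """Return the next healthy proxy, cycling through the healthy list."""
        candidates = self.healthy()
        if not candidates:
            raise RuntimeError("no healthy proxies left")
        proxy = candidates[self._next % len(candidates)]
        self._next += 1
        return proxy

    def report_failure(self, proxy):
        self.failures[proxy] += 1

    def report_success(self, proxy):
        # A success resets the count, so one bad request does not retire a proxy.
        self.failures[proxy] = 0
```

For example, `ProxyPool(["http://proxy-a.example:8080", "http://proxy-b.example:8080"])` would alternate between the two addresses until one of them accumulates three consecutive failures.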

Finally, set up alerts for recurring failures so you’re immediately notified of broader issues. Use queue-based processing with dead letter queues to handle failed requests. When a request exhausts all retry attempts, it should be moved to a separate queue for further analysis or manual processing. This ensures no data is lost while keeping your main pipeline operational and reliable.
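The dead letter queue idea can be illustrated with an in-memory sketch. Production systems would typically use a message broker; the `process_queue` function, its attempt limit, and the shape of the dead-letter entries here are assumptions made for this example.

```python
from collections import deque


def process_queue(urls, fetch, max_attempts=3):
    """Fetch each URL, requeueing failures; exhausted items go to a dead letter list."""
    pending = deque((url, 1) for url in urls)
    results = {}
    dead_letters = []
    while pending:
        url, attempt = pending.popleft()
        try:
            results[url] = fetch(url)
        except Exception as error:
            if attempt < max_attempts:
                # Put the item at the back so other URLs are not held up.
                pending.append((url, attempt + 1))
            else:
                # Keep enough context to analyze or replay the request later.
                dead_letters.append(
                    {"url": url, "attempts": attempt, "error": str(error)}
                )
    return results, dead_letters
```

Because failed items are recorded rather than dropped, they can be inspected or replayed after the underlying problem is fixed while the main queue keeps moving.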

How ShoppingScraper Handles Dynamic Website Scraping

Tackling the complexities of dynamic websites often requires advanced technical know-how. ShoppingScraper simplifies this process by automating tasks like rendering dynamic content and managing access restrictions, allowing you to focus on analyzing the data rather than wrestling with the technical challenges of scraping. Here’s how ShoppingScraper addresses common obstacles in e-commerce data extraction.

Built-in Features to Prevent Errors

ShoppingScraper is designed to reduce the likelihood of scraping errors. For example, its automated content rendering eliminates the need to manually handle headless browsers, a common pain point in traditional scraping setups.

The platform also integrates proxy support, which takes care of rotating proxies and performing health checks automatically. This minimizes the risk of blockages and HTTP errors, ensuring smoother data collection.

Another standout feature is its ability to handle anti-scraping measures. Without requiring extra configuration on your part, ShoppingScraper navigates these barriers in the background, letting you focus entirely on gathering and analyzing clean, reliable data.

E-commerce-specific tools further streamline data extraction. These tools are frequently updated to adapt to changes in website layouts, so even when target sites undergo modifications, your data collection remains largely unaffected. With automated scheduling, recurring scraping tasks run seamlessly. Any failed requests are retried automatically, and persistent issues are logged for easy review.

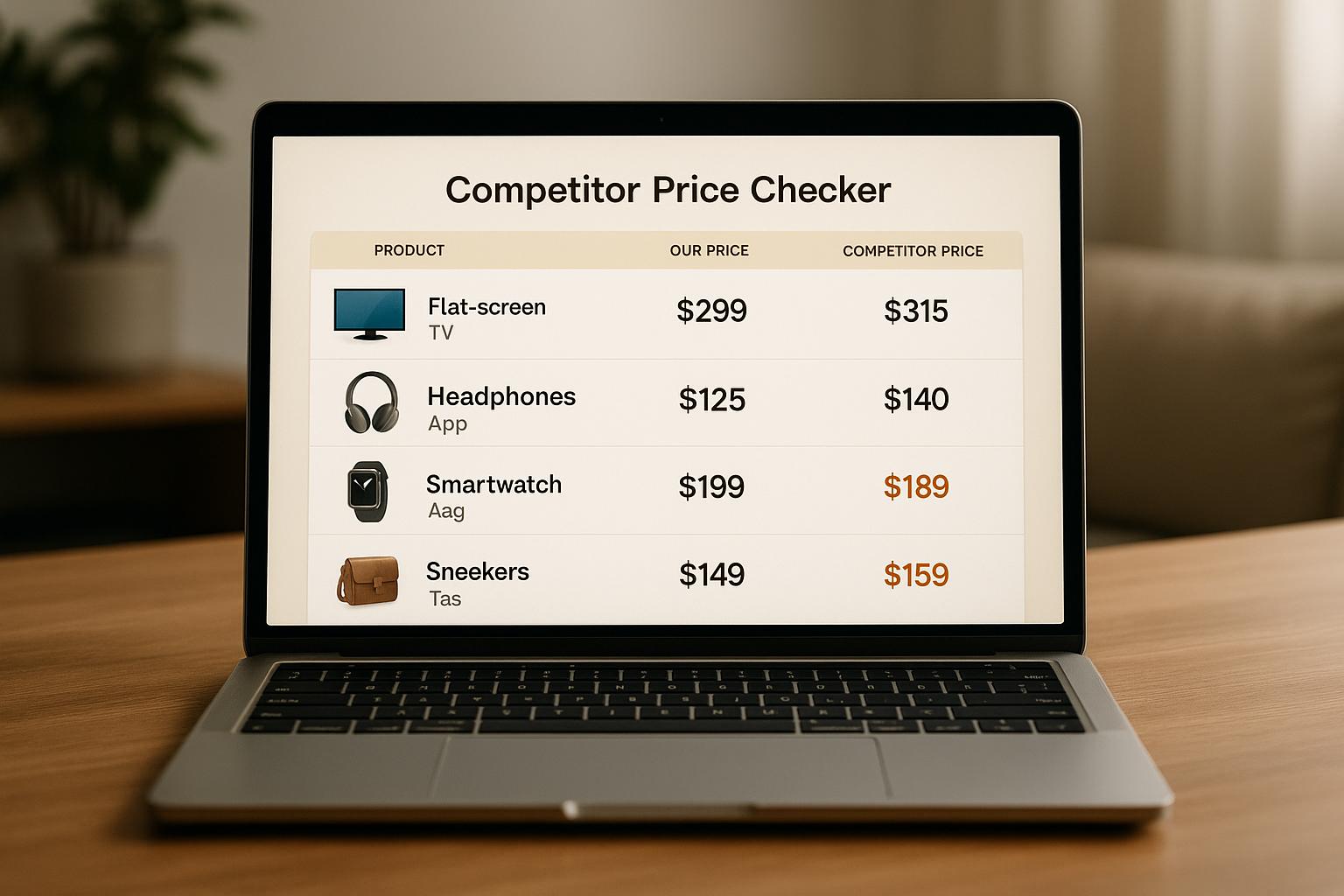

Simplified E-Commerce Data Monitoring

ShoppingScraper doesn’t just prevent errors - it also makes monitoring e-commerce data straightforward and efficient. The platform is fine-tuned for Google Shopping and other major online marketplaces, leveraging its deep understanding of site structures and update patterns to optimize data extraction.

You can access real-time product data through API calls or schedule exports in JSON or CSV formats, eliminating the need for complicated monitoring systems.

With multi-marketplace support, you can track competitors across platforms like Amazon and eBay without juggling separate infrastructures. Consistent API endpoints and standardized data formats make it easier to analyze and report on your findings.

The platform also supports seller data extraction, including merchant ratings and product availability. This ensures you have the context required for effective competitive analysis - all without the hassle of managing multiple disconnected scraping operations.

For businesses with high-volume data needs, the Enterprise plan offers support for over 500,000 monthly requests. Additional capacity is available at $15 per 10,000 requests, making it easier to scale your data collection efforts as needed.

Conclusion: Preventing Dynamic Website Scraping Errors

Scraping dynamic websites comes with its own set of hurdles, from content rendering glitches to anti-scraping defenses and HTTP access issues. These obstacles highlight the importance of having a strong error-handling system in place.

Effective error logging and automated recovery processes are crucial. They help catch and address problems early, preventing them from snowballing into bigger issues. Without these tools, pinpointing the root cause of failures becomes a guessing game, leading to recurring problems and wasted time and resources.

Manual scraping is no longer a viable option for modern e-commerce platforms. Sites like Amazon and Google Shopping use advanced anti-bot technologies, requiring methods like proxy rotation, browser fingerprinting management, and handling dynamic content rendering to navigate their defenses.

With ShoppingScraper's built-in tools for error prevention and anti-scraping, data extraction becomes much more efficient. Its ability to adjust to website changes ensures consistent performance, making it a dependable choice for businesses that rely on accurate, ongoing data collection.

FAQs

How can I ensure accurate data when scraping JavaScript-heavy websites?

To gather reliable data from websites that heavily rely on JavaScript, browser automation tools such as Puppeteer or Selenium, which can drive a headless browser, can be invaluable. They load the page the way a real browser does, so dynamic content has a chance to render and the data you collect reflects what users see on the site.

For even greater accuracy, you can intercept network requests to pull data directly from the APIs the page itself calls. This approach avoids potential issues with rendering and provides cleaner, more precise data. Additionally, validating what you extract, for example against regex patterns or predefined value lists, helps maintain consistency and quality. Together, these strategies make your data more dependable and ready for meaningful analysis.
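Validation with a regex pattern and a predefined value list might be sketched like this. The price format, the allowed sizes, and the field names are assumptions chosen for illustration and would need to match the actual site.

```python
import re

# Assumed US-style price such as "$19.99" or "$1,299.00".
PRICE_PATTERN = re.compile(r"^\$\d+(,\d{3})*(\.\d{2})?$")
# Assumed list of acceptable size labels.
ALLOWED_SIZES = {"Small", "Medium", "Large", "6", "7", "8"}


def validate_record(record: dict) -> list:
    """Return the names of fields that fail validation (empty list means valid)."""
    errors = []
    price = str(record.get("price") or "")
    if not PRICE_PATTERN.match(price):
        errors.append("price")
    if record.get("size") not in ALLOWED_SIZES:
        errors.append("size")
    return errors
```

Returning the failing field names, rather than a plain true or false, makes it easier to log which selector or format most likely changed.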

What are the best practices for managing proxies to prevent IP blocking when scraping dynamic websites?

When scraping dynamic websites, avoiding IP blocking is crucial, and a proxy rotation strategy can be your best friend. This means using a pool of proxies that automatically change IP addresses after each request or after a certain number of requests. By doing this, you lower the chances of being flagged and keep your data extraction process running smoothly.

It’s also essential to keep an eye on your proxies' performance. Regularly check for any proxies that might be blocked or compromised, and replace them as needed. Choosing high-quality proxies with solid metadata can also help reduce the risk of detection. By combining these techniques, you can ensure uninterrupted access and a more reliable scraping experience.

What ethical and legal guidelines should I follow when scraping data from dynamic websites?

When working with dynamic websites, it's crucial to prioritize ethical practices and follow legal guidelines. Always honor the website’s terms of service and steer clear of gathering personal, sensitive, or copyrighted information unless you have explicit permission. Using a transparent and accurate User-Agent string is another way to show responsible behavior.

In the U.S., scraping publicly available data is generally permitted. However, ignoring terms of service or extracting protected content without proper authorization can lead to legal trouble. To stay on the right side of the law, take the time to review the site’s terms, avoid accessing restricted or private data, and respect crawl directives such as robots.txt files. Keeping up with relevant laws and best practices will help you navigate these activities responsibly.
