Optimizing HTML Parsing for Large Datasets

September 11, 2025

When dealing with massive HTML datasets - like thousands of e-commerce product pages - standard parsing methods often fail due to memory overload, missed dynamic content, and inconsistent HTML structures. Optimized parsing is essential to ensure accurate, real-time data extraction for tasks like pricing analysis, inventory tracking, and customer experience improvements.

Key Optimization Techniques:

- Chunking: Process HTML in smaller batches to reduce memory usage and improve error handling.

- Lazy Loading: Extract only essential data (e.g., prices, stock) instead of loading entire pages.

- Parallel Processing: Leverage multi-core CPUs to handle multiple tasks simultaneously for faster results.

- Efficient Data Structures: Use hash maps, arrays, sets, or trees based on specific parsing needs.

- Memory Management: Implement streaming parsers, object pooling, and memory profiling to prevent crashes.

Practical Applications:

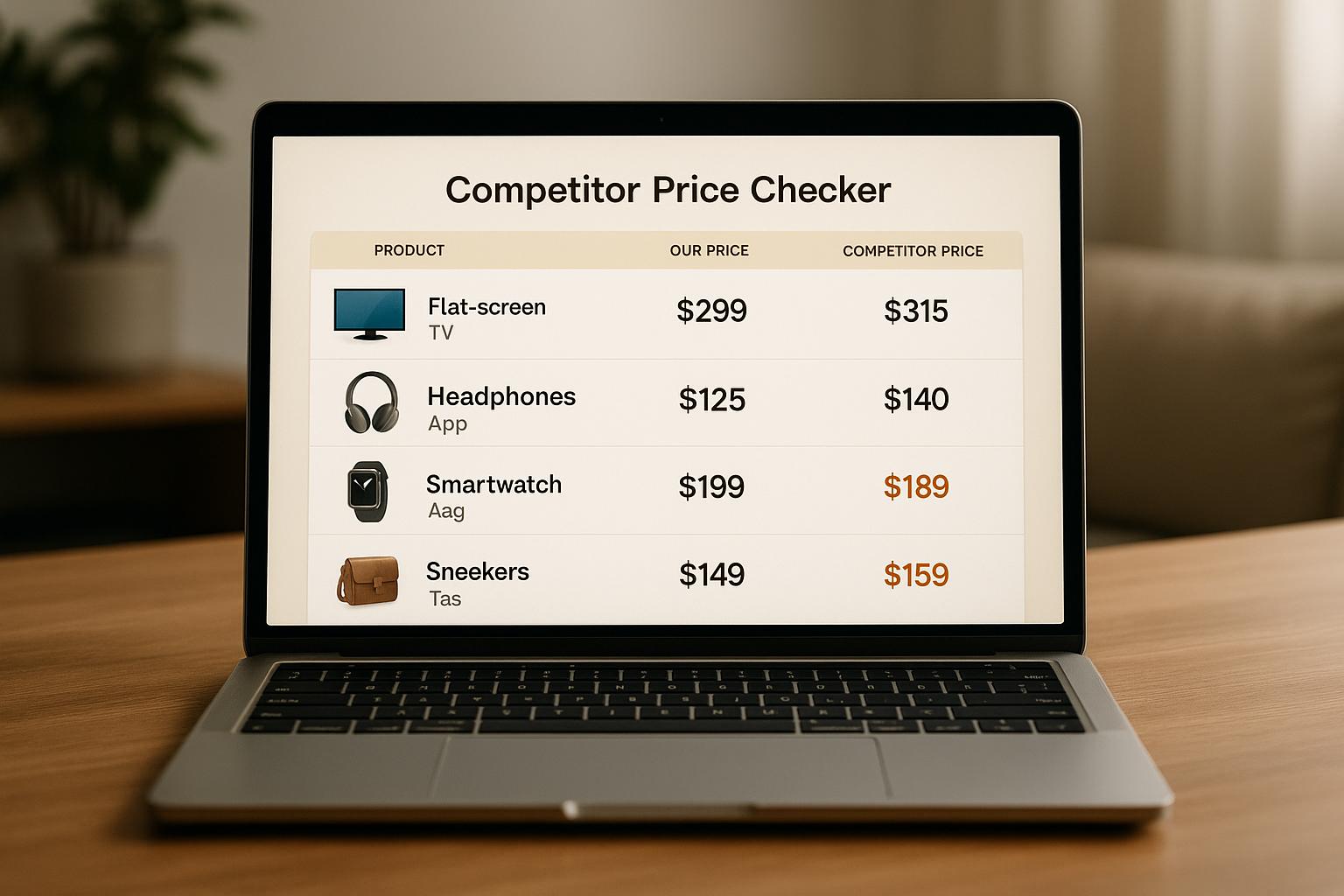

- Price Monitoring: Extract U.S. price formats ($29.99) and stock data efficiently.

- Product Details: Focus on critical data first, like names and availability, while deferring less urgent details.

- Seller Info: Parse ratings and shipping policies quickly using parallel processing.

- Search Results: Handle paginated content with chunking and lazy loading for smoother operations.

For businesses, tools like ShoppingScraper integrate these techniques to handle large-scale e-commerce data scraping with features like automated scheduling, proxy support, and flexible data export options. Plans start at $1.09/month for basic use and scale up to $817/month for enterprise needs, which covers 500,000+ requests.

Efficient HTML parsing isn't just about speed - it ensures timely, accurate insights that can improve decision-making and competitiveness in a fast-paced market.

Main Techniques for Faster HTML Parsing

Speeding up HTML parsing often comes down to a few key methods that can drastically improve how your system handles large datasets. These aren't just minor tweaks - they can significantly cut down processing times while keeping memory usage in check.

Chunking

Breaking large HTML files into smaller, manageable pieces is a game-changer when working with hefty datasets. Instead of trying to process an entire product catalog in one go, chunking allows you to handle data in smaller, more digestible batches, easing the load on your system.

Here’s how it works: large HTML documents or collections of pages are divided into predefined segments. So, instead of parsing thousands of product pages all at once, you process them in smaller groups. This makes memory usage more predictable since each batch uses a set amount of memory, which is released before moving on to the next batch.

The ideal chunk size depends on your system's resources and the complexity of the HTML. For instance, simpler pages with product listings can handle larger batches, while more intricate pages loaded with JavaScript might need smaller chunks. Experimenting with different sizes can help you find the sweet spot for your needs.

Another advantage? Chunking makes error handling easier. If one batch runs into issues like malformed HTML or network errors, it doesn’t bring the entire operation to a halt. You can retry or skip problematic chunks and continue processing the rest of the data smoothly.
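The batching and error-isolation idea can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: `chunked`, `process_in_chunks`, and the `parse_batch` callback are hypothetical names, and the default chunk size of 100 is an arbitrary starting point to tune.

```python
from itertools import islice


def chunked(items, size):
    """Yield successive lists of at most `size` items from any iterable."""
    iterator = iter(items)
    while batch := list(islice(iterator, size)):
        yield batch


def process_in_chunks(pages, parse_batch, chunk_size=100):
    """Parse pages batch by batch; a failing batch is recorded, not fatal.

    `parse_batch` is a hypothetical callback that takes a list of HTML
    strings and returns a list of parsed records.
    """
    results, failed_batches = [], []
    for index, batch in enumerate(chunked(pages, chunk_size)):
        try:
            results.extend(parse_batch(batch))
        except Exception as exc:  # narrow this to your parser's errors in real code
            # Keep the batch so it can be retried or inspected later.
            failed_batches.append((index, batch, str(exc)))
    return results, failed_batches
```

Because `chunked` pulls from an iterator, only one batch of raw pages needs to be in memory at a time when `pages` is itself a generator. Failed batches are kept for retry, so they stay in memory until you handle them.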

Once you've optimized memory with chunking, lazy loading takes efficiency a step further.

Lazy Loading

Lazy loading is all about focusing on what you actually need, skipping the overhead of loading entire pages. Instead of pulling in every bit of data, you extract only the essentials - like prices or stock availability - right as the content streams in. This is especially handy for e-commerce scenarios where you're often dealing with thousands of product pages.

The technique involves identifying the specific HTML elements you need before parsing starts. By targeting those elements with CSS selectors or XPath expressions, you avoid building and storing content you will never use, which speeds up processing. If the response is streamed and you stop reading once the targets are found, it can save bandwidth as well.

For example, if you're tracking competitor prices in the U.S., lazy loading lets you zero in on price tags and stock indicators without fully processing pages filled with reviews, product descriptions, and other extras. It also works well in paginated setups, common on e-commerce platforms. Instead of loading all search result pages at once, you process one page at a time, grabbing the product links you need before moving to the next.
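As a rough sketch of this idea, the following uses Python's standard-library `html.parser` to feed a page in pieces and stop as soon as the wanted fields are found. The class names `price` and `stock` are assumptions about the target markup, and the sketch assumes each target element contains only text (no nested tags).

```python
from html.parser import HTMLParser


class PriceStockExtractor(HTMLParser):
    """Collect text from elements whose class is in TARGETS; ignore the rest."""

    TARGETS = {"price", "stock"}  # hypothetical class names on the target site

    def __init__(self):
        super().__init__()
        self.found = {}
        self._current = None  # target class currently being read, if any

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        for name in classes:
            if name in self.TARGETS and name not in self.found:
                self._current = name
                self.found[name] = ""

    def handle_data(self, data):
        # May be called several times for one text node when input is chunked.
        if self._current:
            self.found[self._current] += data

    def handle_endtag(self, tag):
        self._current = None

    @property
    def done(self):
        return self._current is None and self.TARGETS <= self.found.keys()


def extract_lazily(chunks):
    """Feed chunks until every target is found, then stop reading."""
    parser = PriceStockExtractor()
    for chunk in chunks:
        parser.feed(chunk)
        if parser.done:
            break
    return {key: value.strip() for key, value in parser.found.items()}
```

If `chunks` comes from a streamed HTTP response, breaking out of the loop early means the rest of the page (reviews, descriptions) never has to be read.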

Lazy loading keeps memory usage low while ensuring you get the data you need. To take things even further, parallel processing can supercharge your parsing speed.

Parallel Processing

When dealing with high volumes of data, parallel processing can make a huge difference. By distributing tasks across multiple CPU cores, you can significantly speed up parsing for large datasets. Many modern servers have several cores, but traditional parsing often uses just one, leaving a lot of processing power untapped.

Here’s how parallel processing works: tasks are divided among available CPU cores. For instance, one core might handle electronics product pages, another could take on clothing items, and a third might tackle home goods. This setup allows you to process data much faster, especially on multi-core systems.

Each worker (a thread or a process, depending on the language and runtime) works independently, and most modern programming languages include concurrency libraries that simplify the process. This approach is particularly effective for parsing product catalogs, where each page can be processed separately. For tasks like competitive pricing analysis, where speed is critical, parallel processing ensures you can gather data from thousands of products quickly.

To avoid bottlenecks, dynamic resource management is key. Database connections, API calls, and file access need careful coordination. Tools like connection pooling and rate limiting help ensure that your parallel parsing operations don’t overwhelm external systems or hit API usage limits.
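One way to fan out per-page parsing in Python is `concurrent.futures`. The sketch below is illustrative: `extract_title` is a trivial stand-in for a real parser, and `executor_factory` is a hypothetical parameter that lets you switch between processes (usually the better fit for CPU-bound parsing in CPython) and threads (usually enough for I/O-bound work).

```python
import re
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor

TITLE_RE = re.compile(r"<title>(.*?)</title>", re.IGNORECASE | re.DOTALL)


def extract_title(html):
    """Stand-in for a real per-page parser; returns the page title or ''."""
    match = TITLE_RE.search(html)
    return match.group(1).strip() if match else ""


def parse_pages(pages, executor_factory=ProcessPoolExecutor, max_workers=None):
    """Parse pages concurrently; results come back in input order.

    `max_workers=None` lets the executor pick a default based on the machine.
    """
    with executor_factory(max_workers=max_workers) as executor:
        return list(executor.map(extract_title, pages))
```

When using the process-based default from a script, call `parse_pages` under an `if __name__ == "__main__":` guard, since some platforms start worker processes by re-importing the main module. Passing `executor_factory=ThreadPoolExecutor` avoids that requirement.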

Better Data Structures and Memory Management

When working with techniques like chunking and lazy loading, selecting the right data structures and managing memory effectively are crucial for maintaining solid HTML parsing performance. The right choices can prevent excessive memory usage, especially during large-scale parsing tasks.

Choosing the Right Data Structures

The data structures you choose can make or break your parsing efficiency. Here’s how to match them to your needs:

- Hash maps offer average-case O(1) lookups, which suits tasks like tracking duplicates or indexing product IDs across thousands of pages. For example, in large e-commerce catalogs, where the same product might appear on multiple pages, hash maps allow rapid lookups without scanning entire lists.

- Arrays are a great fit when maintaining order is important, such as preserving the sequence of search results or product rankings. They're also memory-efficient for simpler data types. Dynamic arrays handle appends at the end cheaply (amortized O(1)), but if your parsing process involves frequent insertions and deletions at the front or in the middle, a linked list or double-ended queue is better suited, as it avoids shifting elements.

- Sets are ideal for automatic deduplication. When parsing product listings that appear across multiple category pages, sets can eliminate duplicates on the fly, saving both time and memory compared to manually cleaning up duplicates later.

- Trees work well for hierarchical data, like category structures or nested product attributes. They allow for efficient searching and sorting while keeping relationships between data elements intact, making them invaluable for parsing complex e-commerce datasets.

Matching data structures to specific access patterns is key: use hash maps for frequent lookups, trees for sorted or hierarchical data, and arrays for sequential processing. These choices build on chunking and lazy loading methods to ensure smoother handling of large datasets.
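To make the access patterns concrete, here is a small Python sketch that combines a set, a list, and a dict while deduplicating listings. The `(product_id, name)` tuple shape and the function name are assumptions made for the example.

```python
def index_listings(listings):
    """Deduplicate (product_id, name) pairs, keeping first-seen order."""
    seen_ids = set()  # set: average O(1) membership test for deduplication
    ordered = []      # list: preserves the order in which products appeared
    by_id = {}        # dict (hash map): direct lookup by product ID
    for product_id, name in listings:
        if product_id in seen_ids:
            continue  # duplicate from another category page; skip it
        seen_ids.add(product_id)
        ordered.append(product_id)
        by_id[product_id] = name
    return ordered, by_id
```

The three structures are kept separate here only to show each role. In Python 3.7+ a single dict would cover all three, since it deduplicates keys and preserves insertion order.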

How to Manage Memory Usage

Memory management is critical when processing gigabyte-scale HTML data. Here are some strategies to keep memory usage under control:

- Streaming parsers process data in smaller chunks instead of loading entire documents into memory. This keeps memory usage predictable no matter the file size.

- Use object pooling to avoid the overhead of repeatedly creating and destroying objects. For instance, if you’re parsing similar structures like product cards or price elements, reusable object pools can significantly cut down on allocation churn and garbage-collection pressure.

- Lazy evaluation helps by deferring expensive operations until they’re absolutely necessary. For example, if you only need prices and availability, skip parsing and storing product descriptions to save both memory and processing time.

- Implement fixed-size buffers and schedule regular cleanups to maintain a stable memory footprint during extended parsing sessions.

- Add circuit breakers for memory usage. If memory consumption crosses a predetermined limit, temporarily pause parsing to allow garbage collection or run emergency cleanup processes, preventing out-of-memory errors during heavy loads.

- Regular memory profiling is a must. Tools designed for memory analysis can pinpoint bottlenecks and leaks early, helping you address issues before they escalate. Continuous monitoring during parsing ensures that memory usage spikes don’t catch you off guard.
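The circuit-breaker and profiling ideas above can be combined in a simple guard. The following is a sketch using Python's standard `tracemalloc` and `gc` modules; the function name, the callbacks, and the 200 MB default limit are all assumptions for illustration. Note that `tracemalloc` adds overhead of its own, so in production you may prefer to sample less often or rely on OS-level metrics.

```python
import gc
import tracemalloc


def parse_with_memory_guard(batches, parse_batch, handle_result,
                            limit_bytes=200 * 1024 * 1024):
    """Process batches one at a time; force a cleanup when memory passes the limit.

    `parse_batch` and `handle_result` are hypothetical callbacks: the first
    parses one batch, the second writes the result somewhere (file, database).
    Returns the number of times the cleanup was triggered.
    """
    started_here = not tracemalloc.is_tracing()
    if started_here:
        tracemalloc.start()
    cleanups = 0
    try:
        for batch in batches:
            handle_result(parse_batch(batch))
            current, _peak = tracemalloc.get_traced_memory()
            if current > limit_bytes:
                gc.collect()  # pause point: reclaim memory before continuing
                cleanups += 1
    finally:
        if started_here:
            tracemalloc.stop()
    return cleanups
```

A cleanup count that keeps climbing is a hint that results are being retained somewhere, which is the kind of leak a memory profiler can help you locate.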

Using These Optimization Techniques in Practice

To effectively use techniques like chunking, lazy loading, and parallel processing, it’s essential to understand how they align with your development goals and how to implement them within your technology stack. The trick lies in tailoring these strategies to your specific parsing needs.

Implementation in Popular Frameworks and Languages

Python offers solid tools for incremental parsing. BeautifulSoup on its own builds a full tree in memory, so for chunked input consider lxml's incremental interfaces (feeding data to a parser piece by piece, or etree.iterparse with html=True), or limit BeautifulSoup to the elements you need with a SoupStrainer. To handle tasks in parallel, Python’s multiprocessing module can distribute CPU-bound parsing across cores, while asyncio is well suited to managing concurrent I/O operations such as fetching pages.
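For the I/O side, a common asyncio pattern is to cap the number of requests in flight with a semaphore, which acts as a simple form of rate limiting. The sketch below uses only the standard library; `fetch_page` is a hypothetical stand-in that sleeps instead of making a real HTTP request, and the limit of 5 is arbitrary.

```python
import asyncio


async def fetch_page(url):
    """Hypothetical stand-in for a real HTTP request."""
    await asyncio.sleep(0.01)  # simulate network latency
    return f"<html><title>{url}</title></html>"


async def fetch_all(urls, max_concurrent=5):
    """Fetch all URLs with at most `max_concurrent` requests in flight."""
    semaphore = asyncio.Semaphore(max_concurrent)

    async def bounded(url):
        async with semaphore:
            return await fetch_page(url)

    # gather returns results in the same order as the input URLs
    return await asyncio.gather(*(bounded(url) for url in urls))
```

From synchronous code this would be run with `asyncio.run(fetch_all(urls))`. In a real scraper you would replace `fetch_page` with a call to an async HTTP client of your choice.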

Node.js thrives in concurrent environments thanks to its event-driven architecture. Use cheerio for HTML manipulation and streams for chunked processing. Dynamic imports allow you to load modules only when needed, reducing memory usage. For heavier tasks, worker threads can offload parsing, ensuring the main thread remains responsive.

Browser applications can leverage Web Workers to handle resource-intensive tasks in the background. Combining code splitting with the Intersection Observer API lets you trigger parsing only when necessary, such as when content becomes visible on the screen.

Other strategies, like object pooling (to reuse parser objects instead of creating new ones), fixed-size buffers for predictable memory usage, and streaming parsers, can help manage resources effectively, even when dealing with massive inputs.

HTML Parsing Examples for US E-Commerce

These optimization techniques shine in practical scenarios, such as parsing HTML for US-based e-commerce sites.

Price Extraction

When pulling price data from US marketplaces, it’s important to account for formats featuring the dollar sign ($), commas as thousand separators, and decimals (e.g., $29.99). Many pages display both regular and discounted prices, so lazy loading can delay detailed parsing until users request it. This allows you to capture essential data like availability and primary pricing quickly.
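A regular expression is one way to handle the dollar sign, thousands separators, and decimals described above. This is a minimal sketch: the pattern and the `parse_usd` name are assumptions for illustration, and real pages may need extra handling for ranges, "from" prices, or missing cents.

```python
import re
from decimal import Decimal

# "$", optional space, then either comma-grouped digits or plain digits,
# then optional two-digit cents.
PRICE_RE = re.compile(r"\$\s?(\d{1,3}(?:,\d{3})+|\d+)(\.\d{2})?")


def parse_usd(text):
    """Return the first U.S.-formatted price in `text` as a Decimal, or None."""
    match = PRICE_RE.search(text)
    if match is None:
        return None
    whole, cents = match.group(1), match.group(2) or ""
    return Decimal(whole.replace(",", "") + cents)
```

Using `Decimal` rather than `float` avoids binary rounding surprises when prices are later compared or summed.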

Product Detail Extraction

For large product catalogs, start by parsing critical details like product names, main images, and availability. Additional details - such as specifications, reviews, and related products - can be parsed later using lazy loading as users scroll to those sections. This staged approach minimizes memory demands while keeping the user interface snappy.
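The staged approach maps naturally onto lazy evaluation. In this Python sketch the product name is parsed immediately, while specifications are parsed only on first access and then cached. The markup (name in an `h1`, specifications as `li` items) is an assumption, and regular expressions stand in for a real HTML parser to keep the example short.

```python
import re
from functools import cached_property


class ProductPage:
    """Parse critical fields eagerly and defer the rest until requested."""

    def __init__(self, html):
        self._html = html
        self.spec_parse_count = 0  # for illustration only
        # Critical detail: parsed right away.
        match = re.search(r"<h1[^>]*>(.*?)</h1>", html, re.DOTALL)
        self.name = match.group(1).strip() if match else None

    @cached_property
    def specifications(self):
        """Parsed on first access, then stored on the instance."""
        self.spec_parse_count += 1
        return re.findall(r"<li[^>]*>(.*?)</li>", self._html, re.DOTALL)
```

The trade-off is that the raw HTML stays in memory until the deferred fields are no longer needed, so for very large catalogs you may want to drop `_html` once the page has been fully processed.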

Seller Information Parsing

Parsing seller details often involves working through nested HTML structures to find business information, ratings, and shipping policies. Parallel processing is particularly helpful here. For instance, you can extract ratings and shipping details for multiple sellers simultaneously, significantly speeding up the process even when dealing with thousands of entries.

Search Results Handling

Search result pages are ideal for showcasing the combined power of these techniques. Start by chunking the initial 20–30 results to manage memory efficiently. Use lazy loading for product images by adding the loading="lazy" attribute, and employ the Intersection Observer API to parse additional listings as users scroll. Meanwhile, background threads or Web Workers can handle more intensive tasks like extracting detailed product information, prices, and seller data.

To get the best results, focus on the biggest bottlenecks - whether it’s memory usage, speed, or user experience - and layer in additional techniques progressively.


Real-Time E-Commerce Data Scraping with ShoppingScraper

Building a robust scraping system takes more than just basic HTML parsing - it demands serious resources and smart strategies. That’s where ShoppingScraper comes in. Techniques like chunking, lazy loading, and parallel processing are built into the platform, giving you a ready-to-use solution for large-scale data scraping.

ShoppingScraper Features

ShoppingScraper is designed for real-time, scalable data extraction, which is essential in fast-moving markets where prices can change in an instant. By leveraging advanced optimization techniques, it ensures smooth and efficient e-commerce data scraping, even on a large scale.

Here’s what makes ShoppingScraper stand out:

- API Integration: Easily connect the platform to your existing workflows for seamless operation.

- Automated Scheduling: Schedule scraping tasks at the best times without manual intervention.

- Market Coverage: Extract data from major platforms like Google Shopping and other key marketplaces.

- Detailed Data Extraction: Gather comprehensive details, including product images, specifications, prices, availability, seller ratings, and shipping policies.

- Flexible Data Export: Export your results in formats like JSON or CSV to suit your needs.

- Global Proxy Support and Advanced Filtering: Bypass geo-restrictions and rate limits while narrowing down data to specific product categories or price ranges.

ShoppingScraper for US E-Commerce Businesses

For U.S. businesses, staying competitive often means keeping up with accurate pricing data and meeting marketplace standards. ShoppingScraper helps tackle these challenges by tailoring extracted data to U.S. formatting conventions, making it easier to use right out of the box.

The platform offers pricing plans to fit businesses of all sizes:

- Hobby plan: $1.09/month for basic testing, with 100 requests per month.

- Growth plan: $217/month, including 50,000 requests, access to the web app, Google Sheets integration, and support for two marketplaces.

- Advanced plan: $435/month, offering 150,000 requests, two user accounts, and access to three marketplaces.

- Enterprise tier: $817/month, supporting 500,000+ requests, five user accounts, and integration with five marketplaces.

ShoppingScraper also simplifies scheduling by automating scraping tasks across U.S. time zones. This ensures compliance with rate limits and avoids downtime during maintenance windows, making it a reliable choice for businesses operating in dynamic e-commerce environments.

Conclusion: Main Points for HTML Parsing Optimization

Review of Optimization Techniques

Optimizing HTML parsing effectively involves a mix of techniques like chunking, lazy loading, and parallel processing to ensure systems remain responsive. Chunking breaks down large datasets into smaller, more manageable parts, preventing memory overload. Lazy loading processes data only when needed, saving both time and computational resources. Meanwhile, parallel processing taps into the power of multi-core systems, allowing multiple parsing tasks to run at the same time.

The choice of techniques should align with the specific needs and limitations of your system. Proper memory management and selecting the right data structures can mean the difference between a system that scales efficiently and one that falters under heavy workloads. Tailor your approach based on factors like data volume, processing frequency, and system constraints. These strategies form the backbone of the performance improvements seen with ShoppingScraper.

How ShoppingScraper Supports Real-Time E-Commerce Data Scraping

ShoppingScraper incorporates these optimization techniques to handle large-scale HTML parsing challenges while ensuring data is formatted to meet U.S. standards and real-time demands. This allows businesses to focus on analyzing insights rather than worrying about the technical infrastructure.

The platform extracts detailed product information such as prices, specifications, and seller details, all formatted specifically for U.S. requirements. This includes proper use of the dollar sign ($), date formats like MM/DD/YYYY, and number formatting (e.g., 1,000.50). Major marketplaces, including Google Shopping, are supported.

For businesses of varying sizes, ShoppingScraper offers flexible pricing plans. The Growth plan costs $217 per month and efficiently handles up to 50,000 requests. For larger needs, the Enterprise plan at $817 per month is designed for over 500,000 requests. This scalability is powered by the integration of chunking, lazy loading, and parallel processing techniques discussed earlier.

On top of that, ShoppingScraper’s automated scheduling feature optimizes task timing. By scheduling parsing during off-peak hours and adhering to rate limits, the platform ensures high-quality data collection while minimizing the risk of being blocked by target websites.

FAQs

How does chunking help with memory management and error handling during HTML parsing?

Chunking helps with memory usage and error management by dividing large datasets into smaller, easier-to-handle parts. Instead of trying to load an entire dataset at once, chunking processes the data step by step, which lowers the chances of overwhelming your system's memory.

It also makes spotting and fixing errors simpler by containing problems within individual chunks. This approach streamlines parsing, making it more efficient and dependable - particularly useful when dealing with massive HTML datasets.

How does parallel processing improve HTML parsing for large datasets?

Parallel processing improves the efficiency of HTML parsing for large datasets by splitting tasks across several CPU cores. By working on multiple chunks of data at the same time, it dramatically cuts down the time needed for processing.

Beyond just speeding things up, this technique makes better use of system resources, which is a big plus when scaling up for large-scale web scraping projects. For businesses managing massive amounts of data, this method ensures quicker and more effective outcomes.

How can I integrate ShoppingScraper into my workflow for real-time e-commerce data scraping?

You can bring ShoppingScraper into your workflow through its RESTful API, which enables smooth, real-time data extraction. The tool offers features like automated scheduling and lets you export data in JSON or CSV formats. This flexibility makes it a great fit for tasks such as inventory management, pricing strategies, or competitor analysis.

By automating the data collection process, ShoppingScraper simplifies your operations, saves time, and ensures you always have the latest information to make more informed decisions.
